Stable diffusion huggingface

For more information, you can check out the official blog post. Since its public release, the community has done an incredible job of working together to make the Stable Diffusion checkpoints faster, more memory efficient, and more performant. This notebook walks you through the improvements one by one so you can best leverage StableDiffusionPipeline for inference.

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. If you are looking for the weights to be loaded into the CompVis Stable Diffusion codebase, come here.

Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository, Paper.

To reduce memory use, you can tell diffusers to expect the weights to be in float16 precision. Note: If you are limited by TPU memory, please make sure to load the FlaxStableDiffusionPipeline in bfloat16 precision instead of the default float32 precision. You can do so by telling diffusers to load the weights from the "bf16" branch.
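The float16 loading mentioned above can be sketched roughly as follows. This is a minimal illustration, assuming the `diffusers` and `torch` packages are installed and a CUDA GPU is available. The helper name `load_pipeline_fp16` is hypothetical, and the text does not name a checkpoint, so the model ID is left to the caller.

```python
def load_pipeline_fp16(model_id, device="cuda"):
    """Load a Stable Diffusion pipeline with float16 weights.

    Hypothetical helper for illustration. `model_id` is whichever
    checkpoint you use on the Hugging Face Hub (not named in the text).
    """
    # Imported lazily so this module can be imported without the heavy
    # dependencies installed.
    import torch
    from diffusers import StableDiffusionPipeline

    # torch_dtype=torch.float16 asks diffusers to hold the weights in half
    # precision instead of the default float32.
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id, torch_dtype=torch.float16
    )
    # Move the whole pipeline to the target device.
    return pipe.to(device)
```

A call might look like `pipe = load_pipeline_fp16("CompVis/stable-diffusion-v1-4")` followed by `image = pipe("a photo of an astronaut riding a horse on mars").images[0]`. The model ID and prompt here are assumptions chosen for the example.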

This model card focuses on the model associated with the Stable Diffusion v2 model, available here. This stable-diffusion-2 model is resumed from stable-diffusionbase base-ema. Resumed for another k steps on x images.

Model Description: This is a model that can be used to generate and modify images based on text prompts. Resources for more information: GitHub Repository.

When running the pipeline, if you don't swap the scheduler it will run with the default DDIM. In this example we swap it to EulerDiscreteScheduler.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive, or content that propagates historical or current stereotypes. The model was not trained to produce factual or true representations of people or events, so using it to generate such content is out of scope for its abilities. Using the model to generate content that is cruel to individuals is a misuse of this model.

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.
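The scheduler swap described earlier in this section can be sketched along these lines. It is a hedged example, not the model card's exact code: it assumes `diffusers` and `torch` are installed and that the checkpoint stores its scheduler configuration in a `scheduler` subfolder. The helper name `load_pipeline_with_euler` is hypothetical, and the model ID is supplied by the caller.

```python
def load_pipeline_with_euler(model_id, device="cuda"):
    """Load a Stable Diffusion pipeline that uses EulerDiscreteScheduler.

    Hypothetical helper for illustration; `model_id` is an assumption
    supplied by the caller.
    """
    # Imported lazily so this module can be imported without the heavy
    # dependencies installed.
    import torch
    from diffusers import EulerDiscreteScheduler, StableDiffusionPipeline

    # Build the Euler scheduler from the checkpoint's own scheduler config.
    scheduler = EulerDiscreteScheduler.from_pretrained(
        model_id, subfolder="scheduler"
    )
    # Passing scheduler=... replaces the pipeline's default scheduler.
    pipe = StableDiffusionPipeline.from_pretrained(
        model_id, scheduler=scheduler, torch_dtype=torch.float16
    )
    return pipe.to(device)
```

For the v2 model discussed here, the Hub ID would presumably be something like `"stabilityai/stable-diffusion-2"`; treat that ID as an assumption and check the model page.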

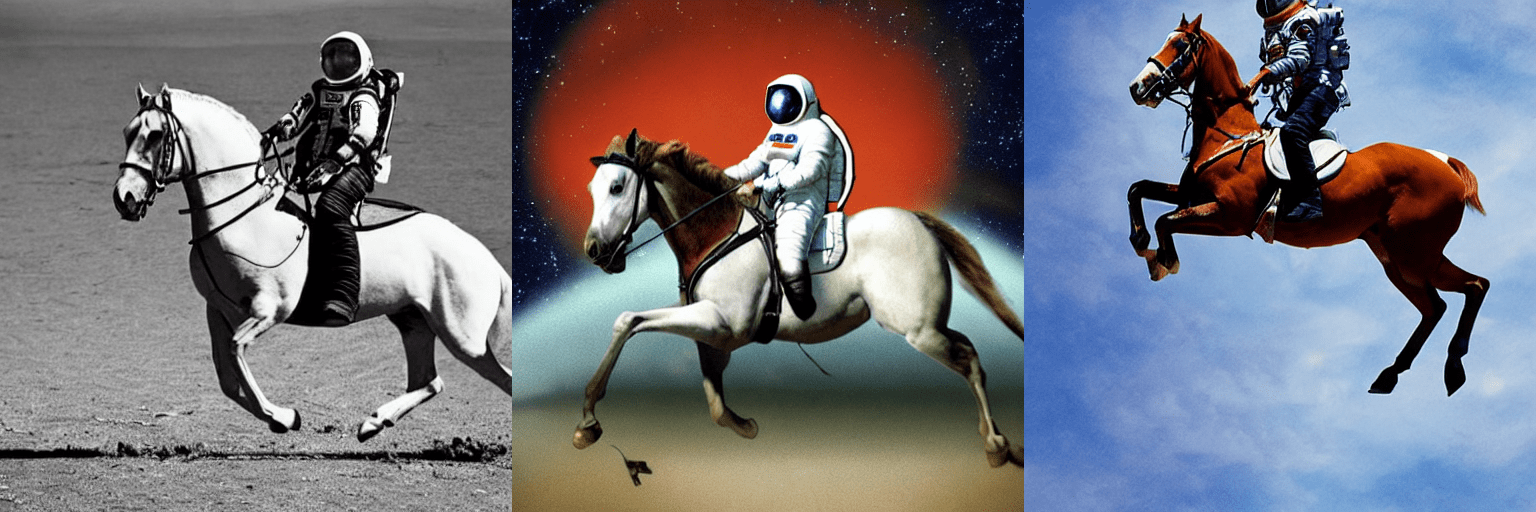

Evaluations with different classifier-free guidance scales 1.
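For readers unfamiliar with the guidance scale, here is a small sketch of the usual classifier-free guidance arithmetic, written in plain Python on lists of floats. The function name `apply_guidance` and the toy inputs are hypothetical; real pipelines apply the same formula to tensors of predicted noise.

```python
def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Combine unconditional and text-conditioned noise predictions.

    Uses the common classifier-free guidance formula
        guided = uncond + scale * (text - uncond)
    applied element by element.
    """
    return [
        u + guidance_scale * (t - u)
        for u, t in zip(noise_uncond, noise_text)
    ]


# Toy values, purely illustrative.
uncond = [0.0, 1.0]
text = [1.0, 3.0]
guided = apply_guidance(uncond, text, 2.0)
```

With a scale of 1 the result equals the text-conditioned prediction, and larger scales push the result further away from the unconditional prediction.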

For more detailed instructions, use cases, and examples in JAX, follow the instructions here.

This repository contains Stable Diffusion models trained from scratch and will be continuously updated with new checkpoints. The following list provides an overview of all currently available models. More coming soon. Instructions are available here. New stable diffusion model Stable Diffusion 2.

Training

Training Data: The model developers used the following dataset for training the model: LAION-2B (en) and subsets thereof (see next section).

Training Procedure: Stable Diffusion v is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. For the first version, 4 model checkpoints are released. Not optimized for FID scores.

A basic crash course is available for learning how to use the library's most important features, like using models and schedulers to build your own diffusion system, and training your own diffusion model. The library provides interchangeable noise schedulers for different diffusion speeds and output quality.

The table below summarizes the available Stable Diffusion pipelines, their supported tasks, and an interactive demo. One such pipeline is Stable Diffusion Inpainting. These tips are applicable to all Stable Diffusion pipelines: explore the tradeoff between speed and quality, and reuse pipeline components to save memory.

Intended uses include applications in educational or creative tools.

Misuse and Malicious Use: One example of misuse is impersonating individuals without their consent.

If you want to contribute to this library, please check out our Contribution guide. You can look out for issues you'd like to tackle to contribute to the library.

Also, we can see we only generate slightly more images per second 3.

Resumed for another k steps on a x subset of our dataset. During training, images are encoded through an encoder, which turns images into latent representations.
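To give a feel for what encoding images into latent representations means in terms of size, here is a small sketch. It assumes a downsampling factor of 8 and 4 latent channels, which is how the Stable Diffusion model cards describe the autoencoder; neither number appears in the text above, and the function name `latent_shape` is hypothetical.

```python
def latent_shape(height, width, downsample_factor=8, latent_channels=4):
    """Return the (H, W, C) shape of the latent for an H x W x 3 image.

    The defaults (factor 8, 4 channels) are assumptions taken from the
    Stable Diffusion model cards, not from the surrounding text.
    """
    if height % downsample_factor or width % downsample_factor:
        raise ValueError("height and width must be divisible by the factor")
    return (
        height // downsample_factor,
        width // downsample_factor,
        latent_channels,
    )


# A 512 x 512 image maps to a much smaller latent grid.
shape_512 = latent_shape(512, 512)
```

Because the diffusion model works on this smaller grid instead of on full-resolution pixels, each denoising step touches far fewer values.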
