withColumn in PySpark

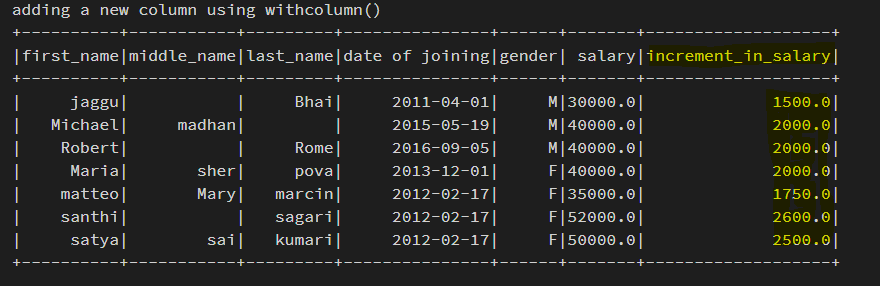

Returns a new DataFrame by adding multiple columns or replacing the existing columns that have the same names. The colsMap is a map of column name and column; each column must only refer to attributes supplied by this Dataset. It is an error to add columns that refer to some other Dataset. New in version 3.

It is a DataFrame transformation operation, meaning it returns a new DataFrame with the specified changes, without altering the original DataFrame.


In this article, we are going to see how to add two columns to an existing PySpark DataFrame using withColumns. withColumns is used to change the value of a column, convert the datatype of an existing column, create a new column, and more. Start by creating a Spark session. Last Updated : 23 Aug,

April 19, Jagdeesh.

In PySpark, withColumn is a widely used transformation function of the DataFrame. It can change the value of a column, convert the datatype of an existing column, create a new column, and so on. To cast or change the data type of a column, combine withColumn with the cast function. To change the value of an existing column, pass the existing column name as the first argument and the value to be assigned as the second argument; the second argument must be of Column type. To create a new column, pass a column name that does not yet exist as the first argument.

withColumn returns a new DataFrame with the updated values. This article explains how to update or change a DataFrame column using Python examples. Note: the column expression must be an expression over the same DataFrame; adding a column from some other DataFrame will raise an error. For example, the salary column can be updated by multiplying each salary by three. withColumn either updates a column or adds one: when the name passed as the first argument already exists, that column is updated; when the name is new, a new column is created. Values can also be updated conditionally, for example setting the gender column to Male for M and Female for F while keeping the existing value for everything else. You can also change the data type of a column with withColumn, but you additionally have to use the cast function of the PySpark Column class.


Returns a new DataFrame by adding a column or replacing the existing column that has the same name. The column expression must be an expression over this DataFrame; attempting to add a column from some other DataFrame will raise an error. This method introduces a projection internally. Therefore, calling it multiple times, for instance via loops in order to add multiple columns, can generate big plans which can cause performance issues and even StackOverflowException. To avoid this, use select with multiple columns at once.


This recipe explains what the withColumn function is and how it is used in PySpark. PySpark withColumn is a transformation function of DataFrame that is used to change the value of a column, convert the datatype of an existing column, create a new column, and more. To cast or change the data type of a column, use the cast function together with withColumn.

Spark withColumn is a DataFrame function that is used to add a new column to a DataFrame, change the value of an existing column, convert the datatype of a column, or derive a new column from an existing column. In this post, I will walk you through commonly used DataFrame column operations with Scala examples. Spark withColumn is a transformation function of DataFrame that is used to manipulate the column values of all rows or selected rows of a DataFrame. The Spark withColumn method introduces a projection internally.

Applying cast inside withColumn changes the datatype of the salary column from String to Integer. The Dataset is defined as a data structure in Spark SQL that is strongly typed and maps to a relational schema.
