Torchaudio

Torchaudio provides data manipulation and transformation for audio signal processing, powered by PyTorch.

Decoding and encoding media is a highly elaborate process. Therefore, TorchAudio relies on third-party libraries to perform these operations. These third-party libraries are called backends, and TorchAudio currently integrates several of them. Please refer to Installation for how to enable backends. However, this approach does not allow applications to use different backends, and it is not well-suited for large codebases. For these reasons, in v2. If the specified backend is not available, the function call will fail.
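The behavior described above, where a call names a backend and fails when that backend is unavailable, can be illustrated with a small sketch. This is not TorchAudio's implementation: the registry, `register_backend`, `load`, and the backend name `"demo"` are all hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, Optional

# Hypothetical registry mapping a backend name to a loader function.
_BACKENDS: Dict[str, Callable[[str], str]] = {}


def register_backend(name: str, loader: Callable[[str], str]) -> None:
    """Make a backend available under the given name."""
    _BACKENDS[name] = loader


def load(path: str, backend: Optional[str] = None) -> str:
    """Dispatch to the requested backend, or to the first registered one."""
    if backend is None:
        if not _BACKENDS:
            raise RuntimeError("no backend is available")
        backend = next(iter(_BACKENDS))
    if backend not in _BACKENDS:
        # The call fails instead of silently falling back to another backend.
        raise RuntimeError(f"backend {backend!r} is not available")
    return _BACKENDS[backend](path)


# Illustrative backend that only reports what it would decode.
register_backend("demo", lambda path: f"decoded {path} with demo")
```

Choosing the backend per call, instead of through one process-wide setting, lets two parts of the same application use different backends without interfering with each other.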


Development will continue under the roof of the mlverse organization, together with torch itself, torchvision, luz, and a number of extensions building on torch. The default backend is av, a fast and lightweight wrapper for FFmpeg. As of this writing, an alternative is tuneR; it may be requested via the option torchaudio. Note, though, that with tuneR, only wav and mp3 file extensions are supported. For torchaudio to be able to process the sound object, we need to convert it to a tensor. Please note that the torchaudio project is released with a Contributor Code of Conduct. By contributing to this project, you agree to abide by its terms.

To add background noise to audio data, you can add an audio Tensor and a noise Tensor.
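A common refinement of plain addition is to scale the noise first so that the mix has a chosen signal-to-noise ratio (SNR). The sketch below shows that arithmetic on plain Python lists instead of tensors. The SNR scaling and the function name `mix_at_snr` are assumptions added for illustration, not something the text above prescribes.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Return speech plus noise, with the noise scaled to the requested SNR in dB."""
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise must not be silent")
    # SNR(dB) = 10 * log10(speech_power / scaled_noise_power), solved for the scale.
    scale = math.sqrt(speech_power / (noise_power * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(speech, noise)]


speech = [1.0, -1.0, 1.0, -1.0]
noise = [0.5, 0.5, -0.5, -0.5]
mixed = mix_at_snr(speech, noise, snr_db=0)  # equal speech and noise power
```

With tensors the same steps apply element-wise; only the power computation and the final addition change form.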

Deep learning technologies have significantly boosted audio processing capabilities in recent years. Deep learning has been used to develop many powerful tools and techniques, such as automatic speech recognition systems that transcribe spoken language into text; another use case is music generation. TorchAudio is a PyTorch package for audio data processing. It provides audio processing functions such as loading, pre-processing, and saving audio files. This article explores PyTorch's TorchAudio library to process audio files and extract features.

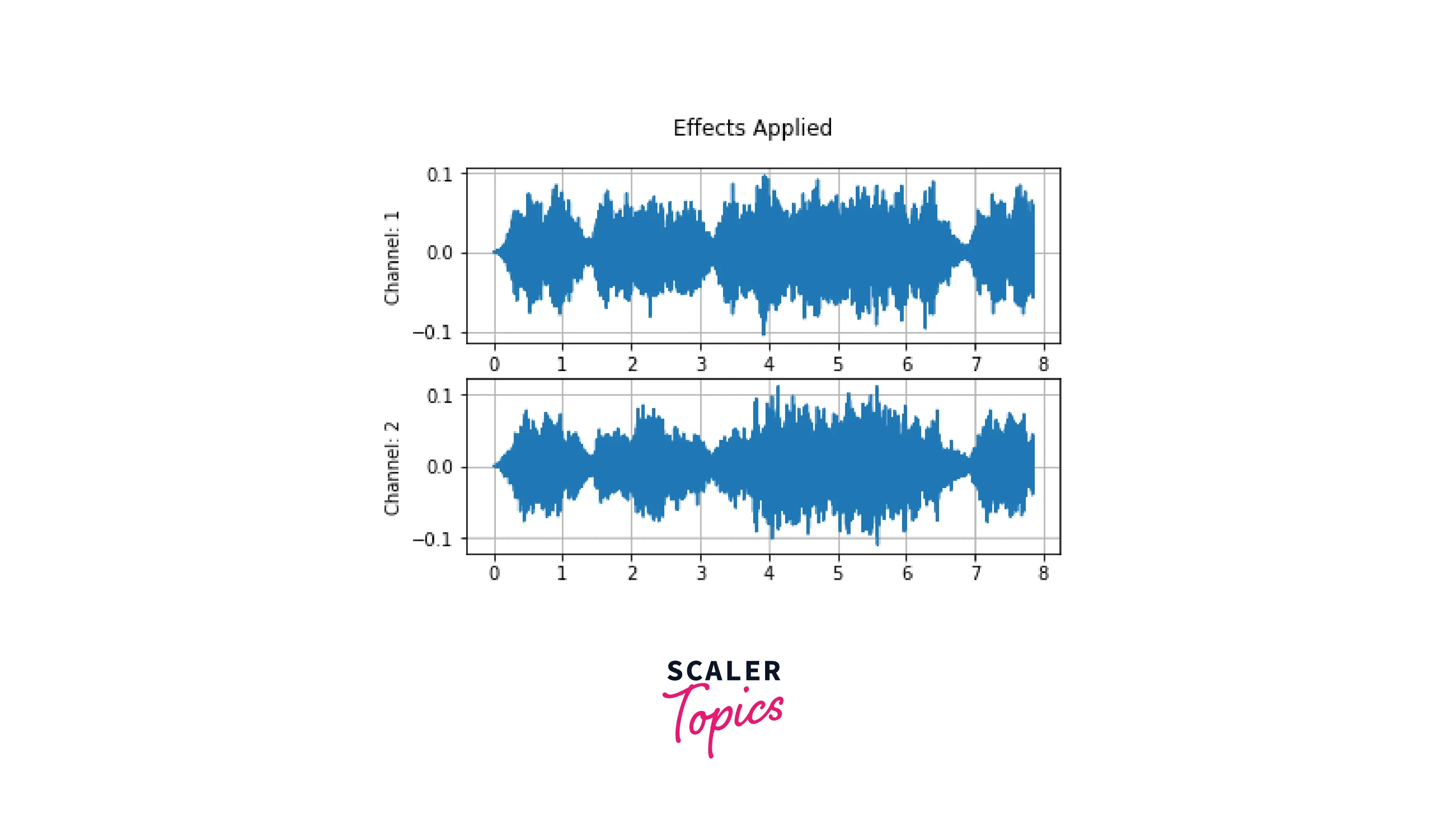

Torchaudio is a library for audio and signal processing with PyTorch. Its tutorials cover topics such as the following: streaming audio and video from a laptop webcam and performing audio-visual automatic speech recognition with an Emformer-RNNT model; forced alignment for multilingual data (topic: Forced-Alignment); the StreamReader class; and applying effects and codecs to a waveform using torchaudio (topic: Preprocessing).


Released: Feb 22,

The aim of torchaudio is to apply PyTorch to the audio domain. By supporting PyTorch, torchaudio follows the same philosophy of providing strong GPU acceleration, focusing on trainable features through the autograd system, and keeping a consistent style (tensor names and dimension names). Therefore, it is primarily a machine learning library and not a general signal processing library. The benefits of PyTorch show in torchaudio because all computations run through PyTorch operations, which makes it easy to use and feel like a natural extension.


Torchaudio is a PyTorch library for processing audio signals. It includes a utility library that downloads and prepares public datasets, and it supports a growing list of transformations. Other pre-trained models that have a different license are noted in the documentation.

Our model consists of 2 convolutional layers using a 5 x 5 kernel, a single dropout layer, and 2 linear layers. Many of these setup functions serve the same functions as the ones above. Now we create the dataloader, which is responsible for feeding data to the model for training; this is done using the torch.

The lowpass filter width argument determines the width of the filter used in the interpolation window, since the filter used for interpolation extends indefinitely. In the waveform plot, the y-axis represents the normalized amplitude, and the x-axis represents time. You can see the difference in the waveform and spectrogram from the effects.

Feature extraction is the process of extracting relevant features or characteristics from raw data that can be used as inputs to a machine learning model. Mel-Frequency Cepstral Coefficients (MFCC) are a common feature representation of the spectral envelope of a sound, which describes how the power of the sound is distributed across different frequencies. The first thing we need for MFCC is the mel filterbanks.
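To make the mel filterbank step concrete, here is a minimal sketch in plain Python. It assumes the HTK-style mel formula, which is one common convention, and builds triangular filters over linearly spaced frequency bins. The function names and parameters are illustrative and are not taken from the torchaudio API.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to mels (HTK-style formula, assumed here)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_freqs, n_mels, sample_rate, f_min=0.0, f_max=None):
    """Return n_mels triangular filters, each a list of n_freqs weights in [0, 1]."""
    if f_max is None:
        f_max = sample_rate / 2
    # Frequencies of the spectrogram bins, linearly spaced from 0 to Nyquist.
    bin_freqs = [i * (sample_rate / 2) / (n_freqs - 1) for i in range(n_freqs)]
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    # n_mels + 2 edge points, equally spaced on the mel scale.
    points = [
        mel_to_hz(m_min + (m_max - m_min) * i / (n_mels + 1))
        for i in range(n_mels + 2)
    ]
    bank = []
    for j in range(n_mels):
        left, center, right = points[j], points[j + 1], points[j + 2]
        row = []
        for f in bin_freqs:
            rising = (f - left) / (center - left)
            falling = (right - f) / (right - center)
            row.append(max(0.0, min(rising, falling)))
        bank.append(row)
    return bank


bank = mel_filterbank(n_freqs=201, n_mels=8, sample_rate=16000)
```

Multiplying a power spectrogram by these filters gives the mel spectrogram; taking the logarithm and a discrete cosine transform of that result is the usual route to MFCC.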


Therefore, beginning with 2. Some available effects are delay, allpass, bandpass, contrast, and divide, among others. The specific examples we went over are adding sound effects, background noise, and room reverb. This enables you to artificially expand the size of the training set, which can be helpful when training data is scarce. Next, we use librosa. The MelSpectrogram transformation takes the waveform of an audio sample as input and returns a tensor representing the mel spectrogram of the audio sample. TimeMasking: apply masking to a spectrogram in the time domain.
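The idea behind masking a spectrogram in the time domain, as TimeMasking does, can be sketched in plain Python: pick a random contiguous run of time frames and overwrite it in every frequency row. The function `time_mask`, its parameters, and the list-of-lists layout (one list per frequency bin, non-empty) are assumptions for illustration. The sampling details of the torchaudio transform may differ.

```python
import random


def time_mask(spec, max_width, mask_value=0.0, rng=None):
    """Return a copy of spec with one random span of time frames masked.

    spec is a list of frequency rows, each a list of values per time frame.
    """
    rng = rng or random.Random()
    n_frames = len(spec[0])
    # Sample the mask width first, then a start position that keeps it in range.
    width = rng.randint(0, min(max_width, n_frames))
    start = rng.randint(0, n_frames - width)
    return [
        [mask_value if start <= t < start + width else value
         for t, value in enumerate(row)]
        for row in spec
    ]


spec = [[1.0] * 10 for _ in range(4)]  # 4 frequency bins, 10 time frames
masked = time_mask(spec, max_width=3, rng=random.Random(0))
```

Because the same frames are masked in every row, the model sees a short stretch of missing time, which is what makes this useful as data augmentation.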
