Text-generation-webui


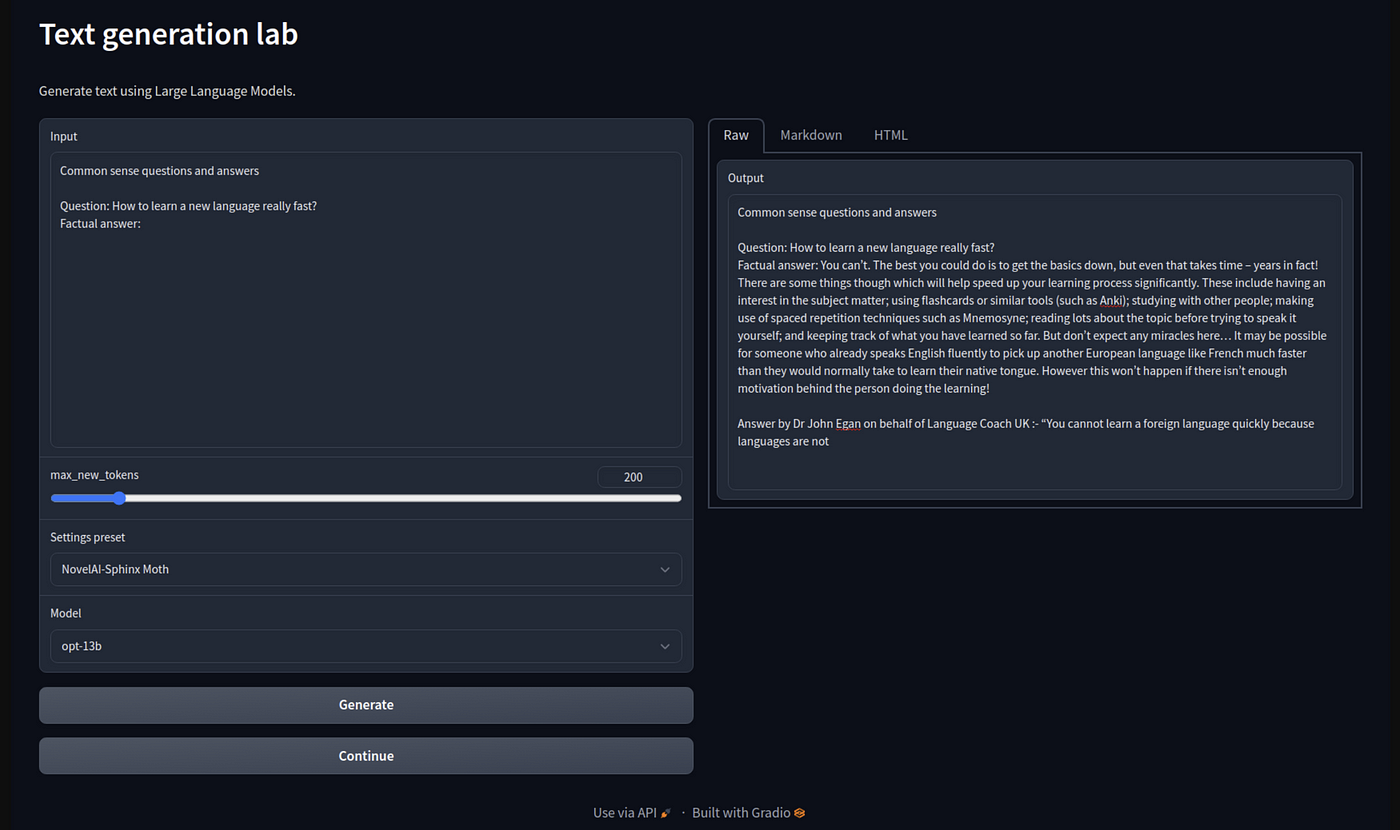

Text-generation-webui offers many convenient features, such as managing multiple models and a variety of interaction modes. The GUI acts as a middleman, in a good sense, that makes using the models a more pleasant experience. You can still run a model without a GPU. The one-click installer is recommended for regular users. See this guide for installing on Mac.


In case you need to reinstall the requirements, you can simply delete that folder and start the web UI again. The script accepts command-line flags.

On Linux or WSL, it can be installed automatically with two commands. If you need nvcc to compile some library manually, a different command is used instead. Manually install llama-cpp-python using the appropriate command for your hardware (see "Installation from PyPI").

Models are usually downloaded from Hugging Face. In both cases, you can use the "Model" tab of the UI to download the model from Hugging Face automatically. It is also possible to download it via the command line.

If you would like to contribute to the project, check out the Contributing guidelines.

In August, Andreessen Horowitz (a16z) provided a generous grant to encourage and support my independent work on this project. I am extremely grateful for their trust and recognition.


LLMs work by generating one token at a time. Given your prompt, the model calculates the probabilities for every possible next token. The actual token generation is done after that, by choosing a token based on those probabilities.

The presets were obtained after a blind contest called "Preset Arena" where hundreds of people voted. The full results can be found here. For more information about the parameters, the transformers documentation is a good reference.

The character parameters define the character that is used in the Chat tab when "chat" or "chat-instruct" is selected under "Mode".
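The token-by-token generation described above can be illustrated with a small sketch. It is not the web UI's own code: the function names, the toy vocabulary, and the logit values are all hypothetical, and `temperature` and `top_p` are used here only as two commonly documented sampling parameters. The sketch turns raw scores into probabilities, optionally trims the unlikely tail, and then draws one token.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p=1.0):
    """Keep the most likely tokens until their cumulative probability reaches top_p, then renormalize."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    filtered = [0.0] * len(probs)
    for i in kept:
        filtered[i] = probs[i] / mass
    return filtered


def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Draw a single token id from the (filtered) next-token distribution."""
    probs = top_p_filter(softmax(logits, temperature), top_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Hypothetical four-token vocabulary and scores, for illustration only.
vocab = ["cat", "dog", "fish", "the"]
logits = [2.0, 1.0, 0.5, -1.0]

rng = random.Random(0)  # seeded so repeated runs draw the same token
token_id = sample_next_token(logits, temperature=0.7, top_p=0.9, rng=rng)
print(vocab[token_id])
```

A full generation loop would append the drawn token to the prompt and repeat the step. Setting `top_p` very low makes the choice effectively greedy, since only the single most likely token survives the filter.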


Install the web UI. Download and install Visual Studio Build Tools. In text-generation-webui, navigate to the Model page. A Google Colab notebook is also available. One of the llama-cpp-python settings is the maximum cache capacity. Pull requests, suggestions, and issue reports are welcome.

Extensions are defined by files named script.

There are different installation methods available, including one-click installers for Windows, Linux, and macOS, as well as manual installation using Conda. After installing and updating the requirements, download a model. Choose the model loader manually; otherwise, it will be autodetected.
