Spark read csv

Spark SQL has built-in support for reading and writing CSV files. The option function can be used to customize the behavior of reading or writing, such as controlling the behavior of the header, delimiter character, character set, and so on. Other generic options can be found in Generic File Source Options.
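As a minimal sketch of the option function described above, the PySpark snippet below chains three options before reading a file. It assumes an existing SparkSession is passed in as spark; the function name, the semicolon delimiter, and the path argument are illustrative choices and do not come from the original text.

```python
def read_csv_with_options(spark, path):
    """Read a CSV file with a header row, a ';' delimiter, and UTF-8 encoding.

    `spark` is assumed to be an existing pyspark.sql.SparkSession.
    The helper name and the option values are hypothetical examples.
    """
    return (
        spark.read
        .option("header", True)       # treat the first line as column names
        .option("sep", ";")           # delimiter character (Spark's default is ",")
        .option("encoding", "UTF-8")  # character set used to decode the file
        .csv(path)
    )
```

With a live session, a call might look like read_csv_with_options(spark, "data/cities.csv"), where the path is only a placeholder.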

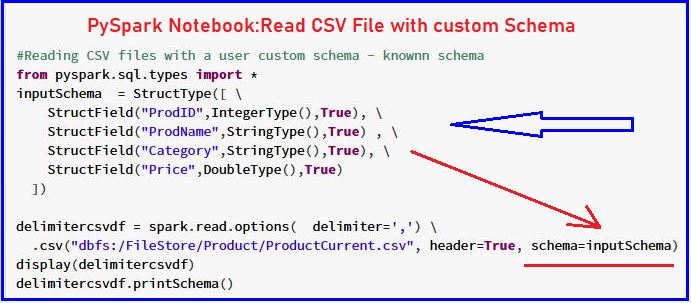

This function will go through the input once to determine the input schema if inferSchema is enabled. To avoid this extra pass over the data, disable the inferSchema option or specify the schema explicitly using schema. For the extra options, refer to Data Source Option for the version you use.
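One way to skip the inference pass is to hand the reader a schema up front. The sketch below assumes an existing SparkSession named spark and uses a DDL-formatted schema string; the column names city and population are made up for illustration.

```python
def read_csv_with_schema(spark, path, schema="city STRING, population INT"):
    """Read a CSV file with an explicit schema instead of inferring one.

    `spark` is assumed to be an existing pyspark.sql.SparkSession.
    The default schema string is a hypothetical example; replace it
    with the columns of your own file.
    """
    return (
        spark.read
        .schema(schema)          # explicit schema, so no inference pass is needed
        .option("header", True)  # the first line holds column names, not data
        .csv(path)               # inferSchema is left unset (off by default)
    )
```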


You can also read CSV data through a temporary view. You can configure several options for CSV file data sources; the Apache Spark reference articles list the supported read and write options.

When reading CSV files with a specified schema, it is possible that the data in the files does not match the schema. For example, a field containing the name of a city will not parse as an integer. The consequences depend on the mode that the parser runs in: PERMISSIVE (the default) inserts nulls for fields that could not be parsed correctly, DROPMALFORMED drops lines that contain fields that could not be parsed, and FAILFAST aborts the read if any malformed data is found. To set the mode, use the mode option. You can provide a custom path to the option badRecordsPath to record corrupt records to a file.

Default behavior for malformed records changes when using the rescued data column. This feature is supported in Databricks Runtime 8. The rescued data column is returned as a JSON document containing the columns that were rescued, and the source file path of the record. To remove the source file path from the rescued data column, you can set a SQL configuration. With the rescued data column, only corrupt records (that is, incomplete or malformed CSV) are dropped or throw errors.
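Setting the parser mode through the mode option can be sketched as follows. The example assumes an existing SparkSession named spark and picks FAILFAST, one of Spark's parser modes, so that a schema mismatch stops the read; the helper name and its arguments are hypothetical.

```python
def read_csv_fail_fast(spark, path, schema):
    """Read a CSV file and abort on the first malformed record.

    `spark` is assumed to be an existing pyspark.sql.SparkSession and
    `schema` a DDL string or StructType describing the expected columns.
    The helper itself is an illustrative sketch.
    """
    return (
        spark.read
        .schema(schema)              # the schema the rows are checked against
        .option("header", True)      # the first line holds column names
        .option("mode", "FAILFAST")  # raise an error instead of inserting nulls
        .csv(path)
    )
```

Because Spark evaluates lazily, a malformed record typically surfaces as an error only when an action such as count() or show() runs on the returned DataFrame.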


DataFrames are distributed collections of data organized into named columns. In this tutorial, you will learn how to read a single file, multiple files, and all files from a local directory into a Spark DataFrame, apply some transformations, and finally write the DataFrame back to a CSV file using Scala. Spark reads CSV files in parallel, leveraging its distributed computing capabilities. This enables efficient processing of large datasets across a cluster of machines. The read methods take a file path as an argument.
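The read-then-write flow described above can be sketched in a few lines. The tutorial text mentions Scala; this sketch uses PySpark instead and assumes an existing SparkSession named spark. The helper name and both path arguments are hypothetical.

```python
def copy_csv(spark, source_paths, output_dir):
    """Read one or more CSV inputs and write them back out as CSV.

    `spark` is assumed to be an existing pyspark.sql.SparkSession.
    `source_paths` may be a single file path, a directory path (all files
    in it are read), or a list of paths. The helper is illustrative only.
    """
    # Read every input into a single DataFrame, using the header row for names.
    df = spark.read.option("header", True).csv(source_paths)

    # Write the DataFrame back as CSV, replacing any existing output.
    (
        df.write
        .mode("overwrite")
        .option("header", True)
        .csv(output_dir)
    )
    return df
```

Spark writes the output as a directory of part files rather than a single CSV file, one part per partition of the DataFrame.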

Each of the following options falls back to a default when None is set: the separator defaults to a comma (,), the encoding defaults to UTF-8, and the quote character defaults to a double quote ("). If you would like to turn off quotations, you need to set an empty string.
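Turning off quotation handling with an empty string might look like the sketch below. It assumes an existing SparkSession named spark and uses the PySpark option names sep and quote; the helper name is hypothetical.

```python
def read_csv_without_quotes(spark, path):
    """Read a CSV file while treating quote characters as ordinary text.

    `spark` is assumed to be an existing pyspark.sql.SparkSession.
    The helper is an illustrative sketch, not part of any library.
    """
    return (
        spark.read
        .option("sep", ",")   # explicit separator (same as the default)
        .option("quote", "")  # empty string disables quotation handling
        .csv(path)
    )
```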


The encoding option, for reading, decodes the CSV files by the given encoding type. For writing, it specifies the encoding charset of saved CSV files. CSV built-in functions ignore this option. The quote option sets a single character used for escaping quoted values where the separator can be part of the value.


The quoteAll option is a flag indicating whether all values should always be enclosed in quotes. Pay attention to the character encoding of the CSV file, especially when dealing with internationalization. The locale option is used, for instance, while parsing dates and timestamps, and custom date formats follow the formats at Datetime Patterns. When a record has more tokens than the length of the schema, the parser drops the extra tokens.
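The quoting flag and a custom date format can be combined on the write side, roughly as sketched below. The example assumes df is an existing PySpark DataFrame and uses the option names quoteAll and dateFormat; the helper name and the chosen pattern are illustrative.

```python
def write_csv_all_quoted(df, output_dir):
    """Write a DataFrame as CSV with every value enclosed in quotes.

    `df` is assumed to be an existing pyspark.sql.DataFrame.
    The helper name and the date pattern are hypothetical examples.
    """
    (
        df.write
        .option("header", True)               # emit column names as the first line
        .option("quoteAll", True)             # quote every value, not only those that need it
        .option("dateFormat", "yyyy-MM-dd")   # pattern used to format date columns
        .csv(output_dir)
    )
```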


When unescaped quotes are found in the input and the value is treated as unquoted, the parser accumulates all characters of the current parsed value until the delimiter is found.
