SageMaker PyTorch

With multi-model endpoints (MMEs), you can host multiple models in a single serving container and serve all of them behind a single endpoint. The SageMaker platform automatically manages the loading and unloading of models and scales resources based on traffic patterns, reducing the operational burden of managing a large number of models.
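
The loading and unloading behavior described above can be pictured with a small simulation. This sketch is purely illustrative: the `ModelCache` class, the memory budget, and the least-recently-used eviction rule are assumptions made for the example, not SageMaker's documented internals. It shows why the first request to a model is slower ("cold") than later requests made while the model is still resident in memory ("warm").

```python
from __future__ import annotations

from collections import OrderedDict


class ModelCache:
    """Toy model of MME-style dynamic loading (hypothetical, not SageMaker's code).

    A model is loaded on its first request. When loading a new model would
    exceed the memory budget, the least recently used models are unloaded.
    """

    def __init__(self, memory_budget_mb: int, model_sizes_mb: dict[str, int]):
        self.memory_budget_mb = memory_budget_mb
        self.model_sizes_mb = model_sizes_mb
        # Maps model name -> size in MB, ordered from least to most recently used.
        self.loaded: OrderedDict[str, int] = OrderedDict()
        self.load_events: list[str] = []

    def used_mb(self) -> int:
        return sum(self.loaded.values())

    def invoke(self, target_model: str) -> str:
        """Serve a request for target_model and return "warm" or "cold"."""
        if target_model in self.loaded:
            # Already in memory: just mark it as most recently used.
            self.loaded.move_to_end(target_model)
            return "warm"
        size = self.model_sizes_mb[target_model]
        if size > self.memory_budget_mb:
            raise MemoryError(f"{target_model} cannot fit in the memory budget")
        # Unload least recently used models until the new one fits.
        while self.used_mb() + size > self.memory_budget_mb:
            evicted, _ = self.loaded.popitem(last=False)
            self.load_events.append(f"unload {evicted}")
        self.loaded[target_model] = size
        self.load_events.append(f"load {target_model}")
        return "cold"
```

For example, with a 1,000 MB budget and three 400 MB models, requesting `a`, `b`, `a`, `c` loads `a` and `b`, serves the second `a` warm, and then unloads `b` (now the least recently used) to make room for `c`.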

Hugging Face Transformers provides Trainer and pretrained model classes for PyTorch to help reduce the effort of configuring natural language processing (NLP) models. If input sequences are not padded to a fixed length, the dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class, so you don't need to change your training code when you use the Transformers Trainer.
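
To see why padding matters for compilation, the sketch below counts the distinct input shapes a compiler would encounter under two padding strategies. The helper functions are hypothetical stand-ins written in plain Python; with a real Transformers tokenizer, the static strategy corresponds to calling the tokenizer with `padding="max_length"`, `truncation=True`, and a fixed `max_length`.

```python
from __future__ import annotations


def pad_to_longest(batch: list[list[int]], pad_id: int = 0) -> list[list[int]]:
    """Dynamic padding: pad each sequence to the longest one in this batch."""
    longest = max(len(ids) for ids in batch)
    return [ids + [pad_id] * (longest - len(ids)) for ids in batch]


def pad_to_max_length(batch: list[list[int]], max_length: int, pad_id: int = 0) -> list[list[int]]:
    """Static padding: truncate or pad every sequence to exactly max_length."""
    padded = []
    for ids in batch:
        ids = ids[:max_length]  # truncate sequences that are too long
        padded.append(ids + [pad_id] * (max_length - len(ids)))
    return padded


def distinct_shapes(batches: list[list[list[int]]]) -> set[tuple[int, int]]:
    """Collect the (batch_size, sequence_length) shapes a compiler would see."""
    return {(len(b), len(b[0])) for b in batches}


# Two batches of token IDs with different sequence lengths.
raw_batches = [[[1, 2, 3], [4, 5]], [[6, 7, 8, 9, 10], [11]]]

dynamic = distinct_shapes([pad_to_longest(b) for b in raw_batches])
static = distinct_shapes([pad_to_max_length(b, max_length=8) for b in raw_batches])
print(dynamic)  # two different shapes, so two graph compilations
print(static)   # a single shape, so the compiled graph can be reused
```

The trade-off is that static padding spends some compute on pad tokens in exchange for avoiding repeated compilation.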

The computational graph gets compiled and executed when xm.

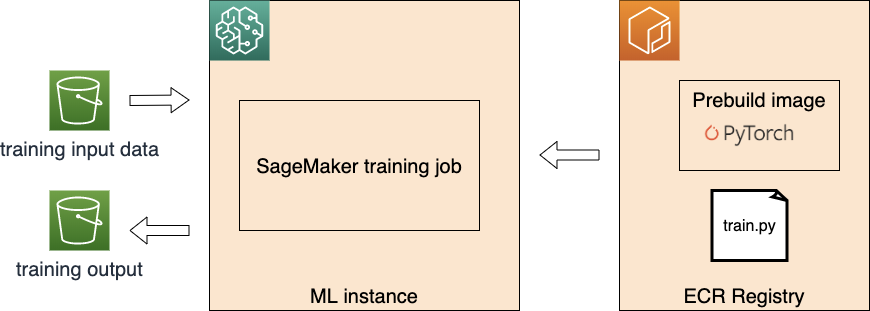

Deploying high-quality, trained machine learning (ML) models to perform either batch or real-time inference is a critical piece of bringing value to customers. However, the ML experimentation process can be tedious: there are many possible approaches, and each requires a significant amount of time to implement. Amazon SageMaker provides a unified interface to experiment with different ML models, and the PyTorch Model Zoo allows us to easily swap our models in a standardized manner. Setting up these ML models as a SageMaker endpoint or SageMaker Batch Transform job for online or offline inference is easy with the steps outlined in this blog post. We will use a Faster R-CNN object detection model to predict bounding boxes for predefined object classes.
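
Whichever way the model is hosted, the raw detector output usually needs light post-processing before it is returned to a client. The sketch below assumes the response mirrors the per-image dictionary that torchvision detection models such as Faster R-CNN return in eval mode (`boxes`, `labels`, `scores`). The `filter_detections` helper, the 0.5 threshold, and the label names are hypothetical choices for illustration, not part of the original post.

```python
from __future__ import annotations


def filter_detections(
    prediction: dict,
    score_threshold: float = 0.5,
    class_names: dict[int, str] | None = None,
) -> list[dict]:
    """Keep detections whose confidence score is at least score_threshold.

    `prediction` mirrors the per-image output of torchvision detection models
    in eval mode (keys "boxes", "labels", "scores"), using plain lists here.
    Boxes are [x1, y1, x2, y2] in pixel coordinates.
    """
    names = class_names or {}
    kept = []
    for box, label, score in zip(prediction["boxes"], prediction["labels"], prediction["scores"]):
        if score >= score_threshold:
            # Fall back to the numeric label if no name mapping is given.
            kept.append({"box": list(box), "label": names.get(label, str(label)), "score": score})
    return kept


# Hypothetical model output for one image.
prediction = {
    "boxes": [[10.0, 20.0, 110.0, 220.0], [50.0, 60.0, 80.0, 90.0]],
    "labels": [1, 3],
    "scores": [0.92, 0.31],
}
detections = filter_detections(prediction, score_threshold=0.5, class_names={1: "person", 3: "car"})
print(detections)
```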

GAN is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas, generate synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The following images show the effect of synthesizing scenery based on semantic layout.

To remove an unwanted object from an image, take the segmentation mask generated by SAM and feed it into the LaMa model together with the original image. The following images show an example. For more details on LaMa, visit their website and research paper. To modify or replace any object in an image with a text prompt, take the segmentation mask from SAM and feed it into the SD model with the original image and the text prompt, as shown in the following example.

Because the models require resources and additional packages that are not in the base PyTorch Deep Learning Container (DLC), you need to build a custom Docker image. We follow the same four-step process to prepare each model. To get started, see Supported algorithms, frameworks, and instances for multi-model endpoints using GPU backed instances.
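
Because SAM, LaMa, and SD are hosted on the same multi-model endpoint, each edit is a short chain of requests to different target models. The sketch below models that chain in plain Python. The artifact names, the payload keys (including selecting the object with a single point), and the injected `invoke` function are hypothetical placeholders; in a real client, `invoke` might wrap the boto3 `sagemaker-runtime` `invoke_endpoint` call and pass the artifact name as its `TargetModel` parameter.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical artifact names under the MME's S3 prefix (not from the source).
SAM_MODEL = "sam.tar.gz"
LAMA_MODEL = "lama.tar.gz"
SD_MODEL = "sd.tar.gz"

# invoke(target_model, payload) -> response, standing in for an endpoint call.
Invoke = Callable[[str, dict], dict]


def edit_image(invoke: Invoke, image: bytes, point: tuple[int, int], prompt: str | None = None) -> dict:
    """Remove (prompt is None) or replace (prompt given) the object at `point`."""
    # Step 1: SAM produces a segmentation mask for the selected object.
    mask = invoke(SAM_MODEL, {"image": image, "point": point})["mask"]
    if prompt is None:
        # Step 2a: LaMa inpaints the masked region to remove the object.
        return invoke(LAMA_MODEL, {"image": image, "mask": mask})
    # Step 2b: SD regenerates the masked region according to the text prompt.
    return invoke(SD_MODEL, {"image": image, "mask": mask, "prompt": prompt})
```

Injecting `invoke` keeps the routing logic testable without a live endpoint; a stub that returns canned responses is enough to check which target models are called and in what order.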

The example we shared illustrates how we can use resource sharing and simplified model management with SageMaker MMEs while still using TorchServe as our model serving stack. The MME reads the models from a common prefix on Amazon S3. The main difference for the new MMEs with TorchServe support is how you prepare your model artifacts. Given an endpoint configuration with sufficient memory for your target models, steady-state invocation latency after all models have been loaded will be similar to that of a single-model endpoint.

To find more example scripts, see the Hugging Face Transformers language modeling example scripts.

Once you confirm the correct object selection, you can modify the object by supplying the original image, the mask, and a text prompt.
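
The post notes that preparing model artifacts is the main difference for MMEs with TorchServe support. The exact files TorchServe expects inside each archive are not reproduced here, so the sketch below only illustrates the generic packaging step: bundling one model's directory into a gzip-compressed tarball that can then be uploaded under the S3 prefix the MME reads from. The `package_model` helper and the file names in the usage note are hypothetical.

```python
from __future__ import annotations

import tarfile
from pathlib import Path


def package_model(model_dir: str | Path, output_path: str | Path) -> list[str]:
    """Bundle every file in model_dir into a gzip-compressed tarball.

    Members are stored relative to model_dir, so the archive root mirrors the
    directory contents. output_path should live outside model_dir; otherwise
    the archive being written could end up inside itself.
    Returns the member names that were written.
    """
    model_dir = Path(model_dir)
    with tarfile.open(output_path, "w:gz") as tar:
        for path in sorted(model_dir.rglob("*")):
            if path.is_file():
                tar.add(path, arcname=path.relative_to(model_dir).as_posix())
    # Re-open the archive to report exactly what was packaged.
    with tarfile.open(output_path, "r:gz") as tar:
        return tar.getnames()
```

For instance, a directory containing `handler.py` and `weights/model.pt` produces an archive whose members are `handler.py` and `weights/model.pt`, with no leading directory component. Each model gets its own archive, and the MME loads whichever archive a request names.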
