Rust web scraping

In this article, we will learn web scraping with Rust. The tutorial focuses on extracting data with the language and closes with the advantages and disadvantages of using Rust for the job. We will talk about the libraries involved in a bit.

Web scraping is a method used by developers to extract information from websites. While there are numerous libraries available for this in various languages, using Rust for web scraping has several advantages. This tutorial will guide you through the process of using Rust for web scraping. Rust is a systems programming language that is safe, concurrent, and practical. It's known for its speed and memory safety, as well as its ability to prevent segfaults and guarantee thread safety.

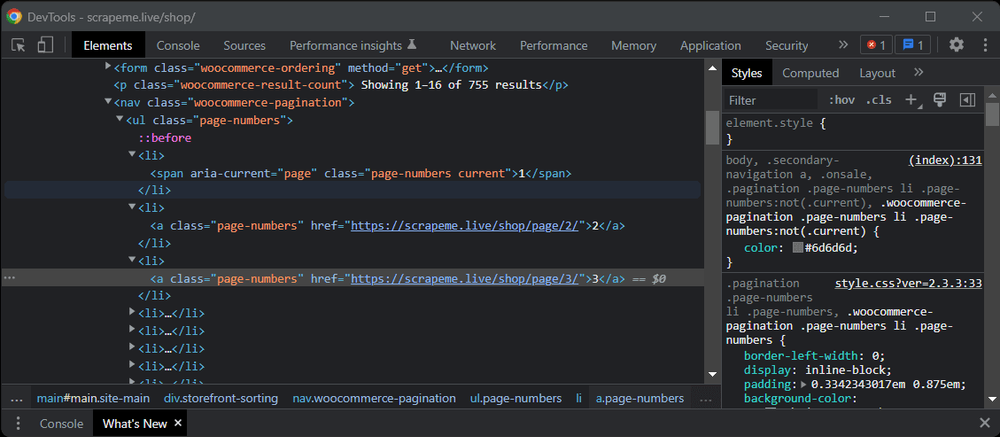

The easiest way to get data from a website is to connect to its API. If the website has a free-to-use API, you can just request the information you need.

When there is no API, you scrape the page itself. Start by creating a new Rust project, which is best done with Cargo. Next, add the required libraries to the dependencies section at the end of the Cargo.toml file.

Scraping a page usually involves getting the HTML code of the page and then parsing it to find the information you need. To display a web page, the browser client sends an HTTP request to the server, which responds with the source code of the web page. The browser then renders this code. In Rust, you can use reqwest to send that request. It can do a lot of the things that a regular browser can do, such as open pages, log in, and store cookies.

The hardest part of a web scraping project is usually getting the specific information you need out of the HTML document. A commonly used tool for this in Rust is the scraper library. It parses the HTML document into a tree-like structure, in which you then find and select the parts you need.
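The request and response exchange described above can be sketched at the text level. This is only an illustration that uses the standard library: the function names `build_get_request` and `split_response` are hypothetical, and in practice a library such as reqwest builds the request and parses the response for you.

```rust
/// Build the text of a minimal HTTP/1.1 GET request for `path` on `host`.
/// (Hypothetical helper, for illustration only.)
fn build_get_request(host: &str, path: &str) -> String {
    format!(
        "GET {} HTTP/1.1\r\nHost: {}\r\nConnection: close\r\n\r\n",
        path, host
    )
}

/// Split a raw HTTP response into (status line, body).
/// Returns None when the blank line that ends the headers is missing.
fn split_response(raw: &str) -> Option<(&str, &str)> {
    // Headers and body are separated by an empty line (CRLF CRLF).
    let (head, body) = raw.split_once("\r\n\r\n")?;
    // The first header line is the status line, e.g. "HTTP/1.1 200 OK".
    let status_line = head.lines().next()?;
    Some((status_line, body))
}
```

The body returned by `split_response` is the HTML source that a scraper would go on to parse.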

Finally, there is the crawl function, where we check whether we have already traversed the current link and, if we have not, crawl it.
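A minimal sketch of that visited-link check might look like the following. It is not the original crawl function: the `pages` map is a hypothetical in-memory stand-in for the web (URL to the links found on that page), so the example needs no network access, and the `order` vector exists only to show which pages were crawled.

```rust
use std::collections::{HashMap, HashSet};

/// Crawl starting at `url`, skipping any link that is already in `visited`.
/// `pages` is a hypothetical stand-in for fetching and parsing a page:
/// it maps a URL to the links found on it.
fn crawl(
    url: &str,
    pages: &HashMap<&str, Vec<&str>>,
    visited: &mut HashSet<String>,
    order: &mut Vec<String>,
) {
    // `insert` returns false when the URL was already traversed.
    if !visited.insert(url.to_string()) {
        return;
    }
    order.push(url.to_string());

    // A real crawler would download the page here and extract its links.
    if let Some(links) = pages.get(url) {
        for link in links {
            crawl(link, pages, visited, order);
        }
    }
}
```

Because every URL is recorded before its links are followed, pages that link back to each other do not cause an endless loop.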

Web scraping is a tricky but necessary part of some applications. It refers to gathering data from a webpage in an automated way: if you can load a page in a web browser, you can load it into a script and parse the parts you need out of it! However, web scraping is often a last resort because it can be cumbersome and brittle. A script can also send requests much faster than a human visitor. This is considered rude, as it might swamp smaller web servers and make it hard for them to respond to requests from other clients.
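One common way to avoid swamping a server is to pause between requests. The sketch below assumes a hypothetical `fetch` callback in place of a real HTTP call, and the function name `fetch_politely` is made up for this example; a suitable delay depends on the site you are scraping.

```rust
use std::thread;
use std::time::Duration;

/// Call `fetch` for each URL, pausing `delay` between consecutive requests
/// so that a small server is not flooded. Returns the number of requests made.
/// `fetch` is a hypothetical callback; a real scraper would send the HTTP
/// request inside it.
fn fetch_politely<F>(urls: &[&str], delay: Duration, mut fetch: F) -> usize
where
    F: FnMut(&str),
{
    let mut count = 0;
    for (i, &url) in urls.iter().enumerate() {
        if i > 0 {
            // Wait before every request except the first one.
            thread::sleep(delay);
        }
        fetch(url);
        count += 1;
    }
    count
}
```

For example, `fetch_politely(&["/a", "/b"], Duration::from_secs(1), |url| println!("fetching {}", url))` would print the two URLs about one second apart.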

Rust is a fast programming language similar to C. It is suitable for creating system programs, such as drivers and operating systems, as well as regular programs and web applications. Choose Rust for making a web scraper when you need more significant and lower-level control over your application, for instance if you want to track used resources or manage memory.

In this article, we will explore the nuances of building an efficient web scraper with Rust, highlighting its pros and cons at the end. Whether you are tracking real-time data changes, conducting market research, or simply collecting data for analysis, Rust's capabilities will allow you to build a web scraper that is both powerful and reliable.

To install Rust, go to the official website and download the distribution for the Windows operating system, or copy the install command for Linux. When you run the file on Windows, a command prompt will open and the installer will offer you a choice of three options. As we don't want to configure the dependencies manually, we select option 1 for automatic installation. When the installation is complete, you will see a message saying that Rust and all the necessary components have been successfully installed.

You might be familiar with web scraping using the popular languages Python and JavaScript. You might have also done web scraping in R. But have you ever thought of trying web scraping in Rust?

Web scraping is particularly useful when developers need to gather and analyze data from various sources that may not offer a dedicated API. Recently, Rust has been gaining adoption as a language for web development, from front-end to server-side app development.

Installing Rust is a pretty straightforward process. To create a Rust script, make a new file with the .rs extension and add the modules you need to it.

Once a request has been made, you still have to extract the HTML string from the res variable. For tabular data, we can use the table header names as keys and the table rows as values. As a result, you will get a file with the extracted output.

Some pages can only be scraped through a real browser. A browser automation library gives a Rust interface for sending commands to the browser, like loading web pages, running JavaScript, simulating events, and more. Dealing with these obstacles can be tricky during web scraping with Rust.
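The idea of using table header names as keys and table rows as values can be sketched as follows. The example assumes the headers and cells have already been extracted from the HTML as plain strings; the function name `rows_to_records` is hypothetical.

```rust
use std::collections::BTreeMap;

/// Turn a table into one record per row, keyed by column header.
/// `zip` stops at the shorter side, so extra cells or extra headers are ignored.
fn rows_to_records(headers: &[&str], rows: &[Vec<&str>]) -> Vec<BTreeMap<String, String>> {
    rows.iter()
        .map(|row| {
            headers
                .iter()
                .zip(row.iter())
                // Pair each cell with the header of its column.
                .map(|(header, cell)| (header.to_string(), cell.to_string()))
                .collect()
        })
        .collect()
}
```

Each record can then be looked up by column name, for example `records[0]["name"]`, which makes it straightforward to write the rows out in a structured format later.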

Web scraping with Rust is an empowering experience. Rust is built with a main focus on building software. Since there are so many books, we are going to iterate over all of them using a for loop. After selecting the elements with the titleline class using the parse function, the for loop traverses them. Then, let's use the ability to add extraction rules and add them to the body of the query to get the required data at once. We will use the json crate for this step.