PyTorch loss functions

As a data scientist or software engineer, you might have come across situations where the standard loss functions available in PyTorch are not enough to capture the nuances of your problem. In this blog post, we will discuss how to create custom loss functions in PyTorch and integrate them into your neural network model.

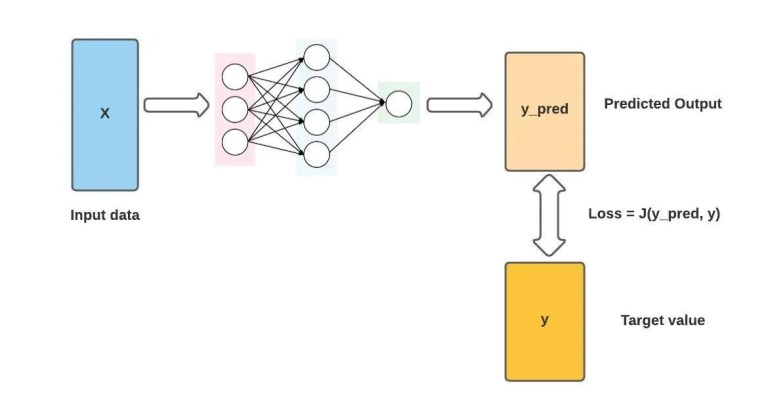

Neural networks can perform many different tasks. Each task produces a different kind of output and needs a suitable loss function. How you configure the loss function can make or break the performance of your algorithm: a correctly configured loss steers the model toward the behavior you want. Luckily, ready-made loss functions exist for most common machine learning tasks. A loss function gauges the error between the predicted output and the provided target value.


Deep learning training uses loss functions as a feedback mechanism to evaluate mistakes and improve the learning trajectory. In this article, we will go in depth on loss functions and their implementation in the PyTorch framework.

A loss function is a mathematical function or expression that measures a model's performance on a dataset by quantifying how close each predicted value is to the actual value. When a model makes predictions that are very close to the actual values on both the training and testing datasets, we have a fairly robust model. The objective of the learning process is to minimize the error reported by the loss function, so that the model improves after every training iteration. Different loss functions suit different training tasks, and each has been designed by researchers to keep gradient flow stable during training.

Google Colab is helpful if you prefer to run your PyTorch code in a web browser. It comes with all major frameworks preinstalled, so you can experiment with PyTorch loss functions right away. Another option is to install PyTorch on a local machine using the Anaconda package installer.
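To make the point about matching the loss to the task concrete, here is a minimal sketch that applies three built-in torch.nn losses to toy tensors. The tensor values are made up for illustration, and the pairing of loss to task shown here is a common default rather than the only valid choice.

```python
import torch
import torch.nn as nn

# Regression: predictions and targets are real-valued tensors of the same shape.
reg_pred = torch.tensor([2.5, 0.0, 2.0])
reg_target = torch.tensor([3.0, -0.5, 2.0])
mse = nn.MSELoss()(reg_pred, reg_target)

# Multi-class classification: raw logits of shape (batch, classes)
# and integer class indices as targets.
logits = torch.tensor([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
labels = torch.tensor([0, 2])
ce = nn.CrossEntropyLoss()(logits, labels)

# Binary classification: one raw logit per example and float targets in {0, 1}.
bin_logits = torch.tensor([0.0, 2.0])
bin_targets = torch.tensor([0.0, 1.0])
bce = nn.BCEWithLogitsLoss()(bin_logits, bin_targets)

print(f"MSE: {mse.item():.4f}")
print(f"Cross-entropy: {ce.item():.4f}")
print(f"BCE with logits: {bce.item():.4f}")
```

Each call returns a scalar tensor because the default reduction averages over the batch.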


In this guide, you will learn the essentials of PyTorch loss functions. Loss functions give your model the ability to learn by determining where mistakes need to be corrected. In technical terms, training a machine learning model is an optimization problem whose objective is to minimize the loss. A loss function assesses how well a model is performing at its task and is used together with PyTorch's autograd functionality to help the model improve. It can be helpful to think of a deep learning model as a student trying to learn and improve. A loss function, also known as a cost or objective function, quantifies the difference between the predictions made by your model and the ground-truth values. Because of this, loss functions serve as the guiding force behind the training process, allowing your model to improve over time.
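The way a loss function works with autograd can be sketched with a tiny training loop. This is an illustrative example, not a recipe from the original article: the linear model, the toy data (y = 3x + 1), the SGD optimizer, and the learning rate are all assumptions chosen to keep the example small.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy regression data following y = 3x + 1 (assumed for illustration).
x = torch.linspace(-1, 1, 20).unsqueeze(1)
y = 3 * x + 1

model = nn.Linear(1, 1)
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(100):
    optimizer.zero_grad()      # clear gradients from the previous step
    pred = model(x)            # forward pass
    loss = criterion(pred, y)  # scalar error between prediction and target
    loss.backward()            # autograd computes d(loss)/d(parameter)
    optimizer.step()           # move parameters against the gradient
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

The loss value itself is only a number; it is the call to backward() on that value that turns the measured error into gradients the optimizer can act on.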


A custom loss function can be created by subclassing nn.Module, which makes it a node in the network's computation graph like any other layer. Mean squared error (MSE) is also used for regression problems, but it is less robust to outliers than mean absolute error (MAE).
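As a rough sketch of what subclassing nn.Module for a loss can look like, the example below defines a root mean squared error loss. The class name RMSELoss and its eps parameter are hypothetical choices made for this illustration; they are not part of torch.nn.

```python
import torch
import torch.nn as nn


class RMSELoss(nn.Module):
    """Root mean squared error. Hypothetical example class, not part of torch.nn."""

    def __init__(self, eps: float = 1e-8):
        super().__init__()
        # Small constant (an assumption of this sketch) that keeps the
        # gradient of sqrt finite when the error is exactly zero.
        self.eps = eps

    def forward(self, pred: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
        return torch.sqrt(torch.mean((pred - target) ** 2) + self.eps)


pred = torch.tensor([2.0, 4.0, 6.0], requires_grad=True)
target = torch.tensor([1.0, 4.0, 8.0])

criterion = RMSELoss()
loss = criterion(pred, target)
loss.backward()  # gradients flow through the custom loss like any built-in one

print(f"loss: {loss.item():.4f}")
print(f"gradient: {pred.grad}")
```

Because forward uses only differentiable tensor operations, no manual backward pass is needed; the instance can be dropped into a training loop wherever a built-in criterion would go.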

Loss functions are fundamental to ML model training: in most machine learning projects, there is no way to drive your model toward correct predictions without one. In layman's terms, a loss function is a mathematical function or expression used to measure how well a model is doing on some dataset.

Some problems call for ranking inputs relative to one another rather than predicting a value directly. An example of this would be face verification, where we want to know which face images belong to a particular person, and can do so by ranking which faces do and do not belong to the original face-holder according to how closely they approximate the target face scan.

The L1 loss function computes the mean absolute error between each value in the predicted tensor and the corresponding value in the target tensor.
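The following sketch checks the L1 loss against a hand-written mean absolute error, compares it with MSE on data containing one outlier, and shows a ranking-style loss using nn.MarginRankingLoss. All tensor values and the margin of 0.5 are assumptions made for illustration; choosing MarginRankingLoss for the ranking case is one possible option, not necessarily what a face verification system would use.

```python
import torch
import torch.nn as nn

pred = torch.tensor([2.5, 0.0, 2.0, 8.0])
target = torch.tensor([3.0, -0.5, 2.0, 7.0])

# L1 loss is the mean of the element-wise absolute differences.
l1_builtin = nn.L1Loss()(pred, target)
l1_manual = (pred - target).abs().mean()

# Same predictions, but the last target is now an outlier.
outlier_target = torch.tensor([3.0, -0.5, 2.0, 18.0])
mae_outlier = nn.L1Loss()(pred, outlier_target)
mse_outlier = nn.MSELoss()(pred, outlier_target)

# Ranking: a target of 1 means the first score should exceed the second
# by at least the margin; pairs that already do so contribute zero loss.
ranking = nn.MarginRankingLoss(margin=0.5)
score_a = torch.tensor([0.9, 0.2])
score_b = torch.tensor([0.4, 0.7])
a_should_rank_higher = torch.tensor([1.0, 1.0])
rank_loss = ranking(score_a, score_b, a_should_rank_higher)

print(f"L1 built-in: {l1_builtin.item():.4f}, manual: {l1_manual.item():.4f}")
print(f"with outlier -> MAE: {mae_outlier.item():.4f}, MSE: {mse_outlier.item():.4f}")
print(f"margin ranking loss: {rank_loss.item():.4f}")
```

Squaring the single large error makes MSE grow much faster than MAE on the outlier data, which is the sense in which MSE is less robust.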
