PySpark where

A DataFrame in PySpark is a two-dimensional data structure that stores data in rows and columns: one dimension refers to rows and the other to columns. Let's install the pyspark module before going further. The command used to install Python modules is "pip", so PySpark can be installed with pip install pyspark.

In this PySpark article, you will learn how to apply a filter on DataFrame columns of string, array, and struct types using single and multiple conditions, and how to filter using isin, with PySpark (Python Spark) examples. Note: PySpark Column functions provide several options that can be used with filter. Below is the syntax of the filter function. The condition can be any expression you want to filter on. Use a Column with the condition to filter rows from a DataFrame; this lets you express complex conditions by referring to column names using dfObject. The same example can also be written as below. In order to use this, you first need to import from pyspark.

This tutorial shows you how to load and transform U. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: creating a DataFrame with Python, and viewing and interacting with a DataFrame. A DataFrame is a two-dimensional labeled data structure with columns of potentially different types. Apache Spark DataFrames provide a rich set of functions (select columns, filter, join, aggregate) that allow you to solve common data analysis problems efficiently. To complete this tutorial, you need permission to create compute enabled with Unity Catalog. If you do not have cluster control privileges, you can still complete most of the following steps as long as you have access to a cluster. From the sidebar on the homepage, you access Databricks entities: the workspace browser, Catalog Explorer, workflows, and compute. Workspace is the root folder that stores your Databricks assets, like notebooks and libraries.

The syntax of the filter function is filter(condition), where condition is either a Column of boolean type or a SQL expression string.

In this article, we are going to see how to filter a PySpark DataFrame with where. The where method filters the rows of a DataFrame based on a given condition. It is an alias for the filter method, and both methods behave exactly the same. We can apply single and multiple conditions on DataFrame columns using the where method. The following example shows how to apply a single condition on a DataFrame using the where method.

The Spark where function is used to filter the rows of a DataFrame or Dataset based on a given condition or SQL expression. In this tutorial, you will learn how to apply single and multiple conditions on DataFrame columns using the where function with Scala examples. The second signature is used to provide SQL expressions to filter rows. To filter rows on a DataFrame based on multiple conditions, you can use either a Column with a condition or a SQL expression. When you want to filter rows based on a value present in an array collection column, you can use the first syntax. If your DataFrame contains nested struct columns, you can use any of the above syntaxes to filter the rows based on the nested column. The examples explained here are also available in the GitHub project for reference.

Databricks uses the Delta Lake format for all tables by default. Many data systems can read these directories of files.

Step 3: View and interact with your DataFrame. View and interact with your city population DataFrames using the following methods. To create a subset DataFrame, select the ten cities with the highest population and display the resulting data.

Last Updated: 28 Mar,

The following example shows how to apply multiple conditions on a DataFrame using the where method.

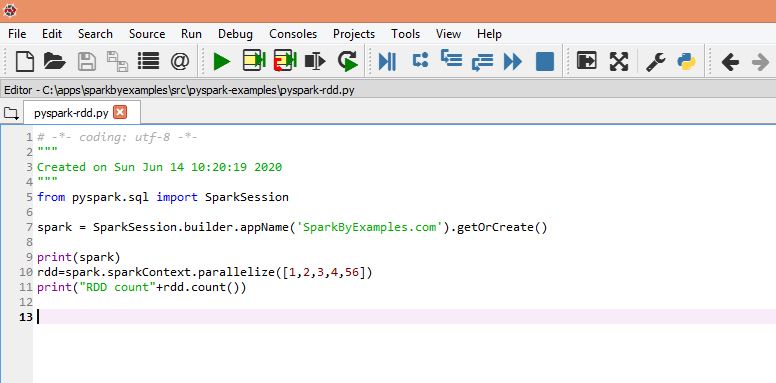

In the second output, we are getting the rows where values in the rollno column are less than 3. There is no difference in performance or syntax between where and filter. The following example shows how to filter a DataFrame using the where method with a Column condition. You can import the expr function from pyspark. View the DataFrame: to view the U.