PySpark filter

The filter condition is a Column of BooleanType or a string containing a SQL expression.

PySpark is the Python API for Apache Spark, a popular open-source distributed data processing engine. One of the most common tasks when working with PySpark DataFrames is filtering rows based on certain conditions. The filter function is one of the most straightforward ways to do this: it takes a boolean expression as an argument and returns a new DataFrame containing only the rows that satisfy the condition.


In big data processing, PySpark has emerged as a powerful tool for data scientists. It allows for distributed data processing, which is essential when dealing with large datasets. One common operation in data processing is filtering data based on certain conditions. A PySpark DataFrame is a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in R or Python (pandas), but with optimizations for speed and functionality under the hood. PySpark DataFrames are designed for processing large amounts of structured or semi-structured data. The filter transformation lets you specify conditions on column values to select rows, and it is a straightforward and commonly used method. The condition can also be written as a SQL expression string, which is especially useful for users familiar with SQL. The when and otherwise functions allow you to apply conditional logic to DataFrames.


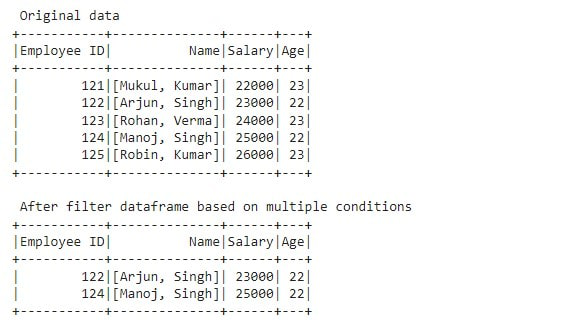

In this PySpark article, you will learn how to apply a filter on DataFrame columns of string, array, and struct types using single and multiple conditions, and how to filter using isin. Note: PySpark Column functions provide several options that can be used with filter. The filter function takes a single condition, which can be any expression you want to filter on. Use a Column in the condition to filter rows from the DataFrame; this lets you express complex conditions by referring to column names through the DataFrame object.

This post discusses what PySpark filter is. In the era of big data, filtering and processing vast datasets efficiently is a critical skill for data engineers and data scientists. Apache Spark, a powerful framework for distributed data processing, offers the filter operation through PySpark as a versatile tool to selectively extract data. This article explores filter and its capabilities, with examples of data filtering in PySpark. filter is a transformation that selects a subset of rows from a DataFrame based on specific conditions.


The PySpark filter function is a workhorse for data analysis. This guide looks at how it works and how to get the most out of data filtering in PySpark. A PySpark DataFrame, a distributed collection of data organized into columns, is what these filters operate on. In PySpark, filter and where are interchangeable: both select rows based on a condition and perform the same operation. Column methods such as isin, like, and rlike handle more complex filtering scenarios. Multiple conditions on columns can be combined using logical operators. With these techniques, PySpark is well suited to filtering large datasets.


Filtering rows early reduces the amount of data that needs to be processed in subsequent steps.

