Keras LSTM

I am using a Keras LSTM to predict future target values (a regression problem, not classification). I created lags for the 7 columns (the target and the other 6 features), making 14 lags for each with a lag interval of 1. I then used the Column Aggregator node to create a list containing the 98 values (14 lags x 7 features).
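A minimal sketch of the lagging step described above, in plain Python. It assumes the 98 values are ordered time step by time step (oldest lag first) and that the target is the first column; both are illustrative assumptions, and the helper names `make_lagged_rows` and `to_timesteps` are hypothetical. If the list is grouped by column instead, the regrouping would need a transpose.

```python
def make_lagged_rows(series, n_lags=14):
    """series: list of rows, one per time step; each row holds the feature
    values, with the target assumed to be in column 0.
    Returns (X, y): X[i] is a flat list of n_lags * n_features values
    (oldest lag first), y[i] is the target at the following time step."""
    X, y = [], []
    for t in range(n_lags, len(series)):
        window = series[t - n_lags:t]                  # the n_lags previous rows
        X.append([v for row in window for v in row])   # flatten to one list
        y.append(series[t][0])
    return X, y


def to_timesteps(flat, n_lags=14, n_features=7):
    """Regroup one flat list of 98 values into 14 time steps of 7 features,
    the (timesteps, features) layout an LSTM input expects per sample."""
    return [flat[i * n_features:(i + 1) * n_features] for i in range(n_lags)]


# Toy data: 30 time steps, 7 columns.
series = [[float(t * 10 + c) for c in range(7)] for t in range(30)]
X, y = make_lagged_rows(series)
sample = to_timesteps(X[0])
print(len(X), len(X[0]), len(sample), len(sample[0]))
```

Each sample is then a 14 x 7 sequence rather than a flat vector of 98 independent inputs, which is what lets the recurrent layer use the time ordering.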

Note: this is an older post. See this tutorial for an up-to-date version of the code used here.

I see this question a lot -- how to implement RNN sequence-to-sequence learning in Keras? Here is a short introduction. Note that this post assumes that you already have some experience with recurrent networks and Keras.

Sequence-to-sequence learning (Seq2Seq) is about training models to convert sequences from one domain to sequences in another domain. This can be used for machine translation or for free-form question answering (generating a natural language answer given a natural language question) -- in general, it is applicable any time you need to generate text.


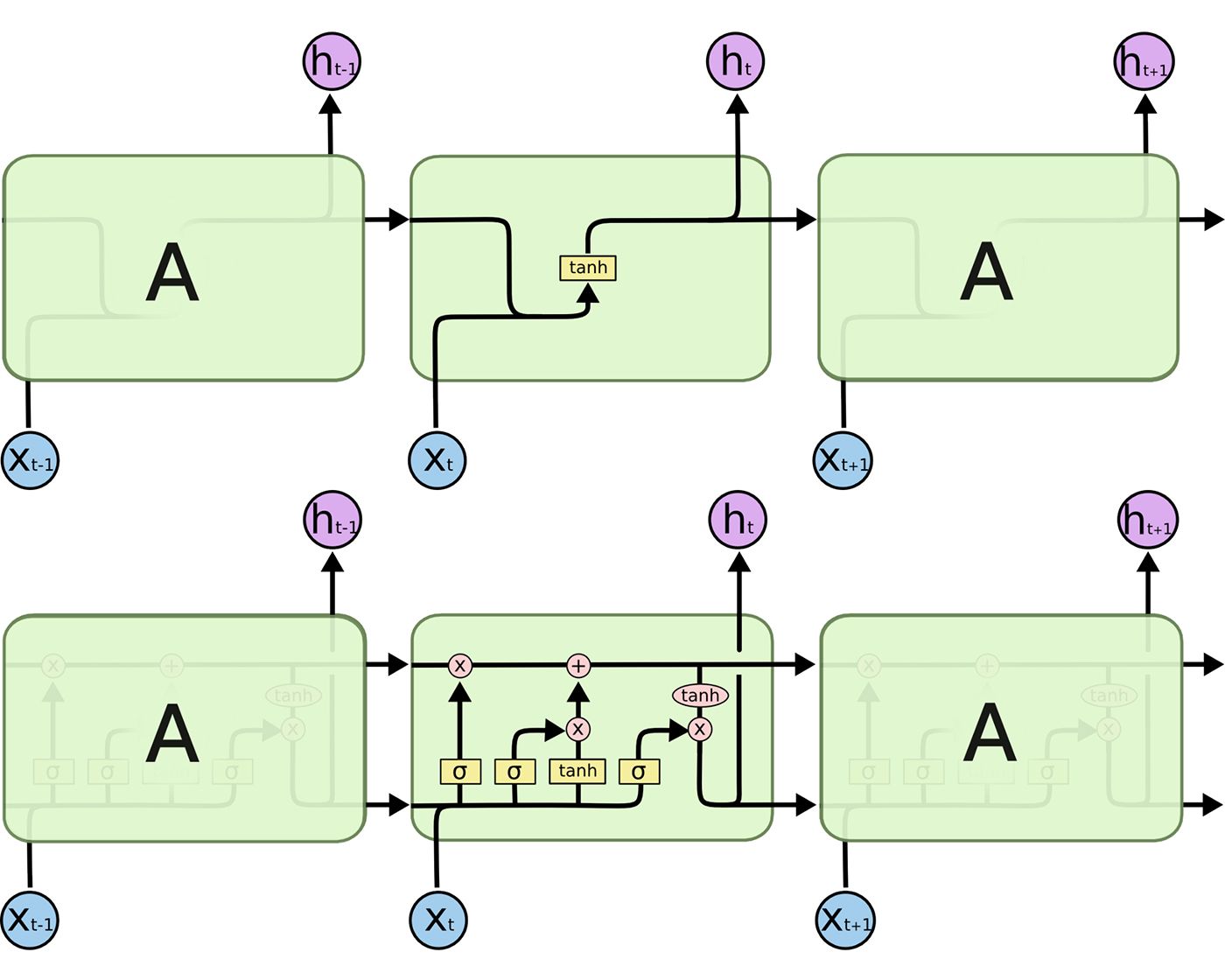

Ayush Thakur.

There are four principal modes in which to run a recurrent neural network (RNN). One-to-one is straightforward enough, but let's look at the others: one-to-many, many-to-one, and many-to-many.

LSTMs can be used for a multitude of deep learning tasks using different modes. We will go through each of these modes along with its use case and a code snippet in Keras.

One-to-many sequence problems are sequence problems where the input data has one time step and the output contains a vector of multiple values or multiple time steps. Thus, we have a single input and a sequence of outputs. A typical example is image captioning, where the description of an image is generated.

We have created a toy dataset, shown in the image below. The input data is a sequence of numbers, while the output data is the sequence of the next two numbers after the input number. Let us train it with a vanilla LSTM. You can see the loss metric for the train and validation data, as shown in the plots. When predicting with test data where the input is 10, we expect the model to generate the sequence [11, 12]. The model predicted the sequence [[
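The one-to-many toy setup described above can be sketched as follows. The data builder is plain Python; `build_model` is only one plausible vanilla LSTM for this shape, and its layer size, activation, optimizer and loss are assumptions rather than the values used in the original article. TensorFlow is imported inside the function so the data part runs without it.

```python
def make_one_to_many(start, stop):
    """Each input is a single number x; the output is [x + 1, x + 2].
    Inputs are shaped (samples, timesteps=1, features=1)."""
    X = [[[float(x)]] for x in range(start, stop)]
    Y = [[float(x + 1), float(x + 2)] for x in range(start, stop)]
    return X, Y


def build_model():
    # Lazy import: only needed when the model is actually built.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        keras.Input(shape=(1, 1)),           # one time step, one feature
        layers.LSTM(50, activation="relu"),  # 50 units is an arbitrary choice
        layers.Dense(2),                     # two output values per sample
    ])
    model.compile(optimizer="adam", loss="mse")
    return model


X, Y = make_one_to_many(1, 10)
print(X[0], Y[0])
```

To train, the nested lists would first be converted to arrays (for example with `numpy.array`) before calling `model.fit`.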


It is recommended to run this script on GPU, as recurrent networks are quite computationally intensive.

Corpus length:
Total chars: 56
Number of sequences:

The model is a keras.Sequential stack that includes an LSTM layer.

Generating text after epoch:
Diversity: 0.
Generating with seed: " fixing, disposing, and shaping, reaches"
Generated: the strought and the preatice the the the preserses of the truth of the will the the will the crustic present and the will the such a struent and the the cause the the conselution of the such a stronged the strenting the the the comman the conselution of the such a preserst the to the presersed the crustic presents and a made the such a prearity the the presertance the such the deprestion the wil
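The "Diversity" value in the log above is, in character-level generation scripts of this kind, a sampling temperature applied to the model's predicted character probabilities. A minimal sketch of that reweighting in plain Python follows; the function names and the example probabilities are made up for illustration and are not taken from the original script.

```python
import math
import random


def apply_temperature(probs, temperature=1.0):
    """Rescale a probability distribution: low temperature sharpens it
    (repetitive, conservative text), high temperature flattens it."""
    logits = [math.log(max(p, 1e-12)) / temperature for p in probs]
    m = max(logits)                         # subtract the max for stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(probs, temperature=1.0, rng=random):
    """Draw the index of the next character from the rescaled distribution."""
    scaled = apply_temperature(probs, temperature)
    return rng.choices(range(len(scaled)), weights=scaled, k=1)[0]


probs = [0.1, 0.6, 0.3]                     # hypothetical model output
sharp = apply_temperature(probs, 0.2)       # almost always picks index 1
flat = apply_temperature(probs, 2.0)        # closer to uniform
print(sharp, flat, sample_index(probs, 0.5))
```

A low diversity value tends to produce the kind of repetitive output shown above ("the the the"), because the most likely character is chosen almost every time.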


Confusing wording, right? Keras and TensorFlow make neural networks much easier to build. The best reason to build a neural network from scratch is to understand how neural networks work. In practical situations, using a library like TensorFlow is the best approach.


How is video classification treated as an example of a many-to-many RNN?

My goal is to predict how the target value is going to evolve for the next time step. If so, I still do not know exactly what to do with the other datasets.

Hi adhamj90, recurrent neural networks and LSTMs are confusing in the beginning, and I hope I can help you understand things a bit better. So you can create the training sequences for each data set and then concatenate them. If yes, you might want to change the input shape to make use of the sequential factor.

Define an input sequence and process it. Train the model as previously. Sampling loop for a batch of sequences (to simplify, here we assume a batch of size 1).

These are some of the resources that I found relevant for my own understanding of these concepts.

In many-to-one sequence problems, we have a sequence of data as input, and we have to predict a single output. Sentiment analysis or text classification is one such use case.

The input has 20 samples with three time steps each, while the output has the next three consecutive multiples of 5.
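The toy data with 20 samples of three time steps and the next three multiples of 5 as output could be built as in the sketch below. The exact layout (non-overlapping inputs starting at 5) is an assumption, and `make_many_to_many` is a hypothetical helper name.

```python
def make_many_to_many(n_samples=20, steps=3, base=5):
    """Inputs: consecutive multiples of `base`, `steps` per sample.
    Outputs: the `steps` multiples that follow each input sequence."""
    X, Y = [], []
    for i in range(n_samples):
        start = base * (1 + i * steps)               # 5, 20, 35, ...
        xs = [start + base * k for k in range(steps)]
        ys = [xs[-1] + base * (k + 1) for k in range(steps)]
        X.append([[float(v)] for v in xs])           # (steps, 1 feature)
        Y.append([float(v) for v in ys])
    return X, Y


X, Y = make_many_to_many()
print(X[0], Y[0])
```

With this layout the first sample maps [5, 10, 15] to [20, 25, 30], and the input has the (samples, timesteps, features) shape of (20, 3, 1).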

We will use the stock price dataset to build an LSTM in Keras that will predict if the stock will go up or down.
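One way to frame "up or down" as a binary target is sketched below. It assumes the dataset has a closing-price column and that an unchanged price counts as "down"; both are illustrative choices, and the prices are made up.

```python
def make_direction_labels(closes):
    """Label each day 1 if the next close is higher, else 0.
    The last day has no following value, so it gets no label."""
    return [1 if nxt > cur else 0 for cur, nxt in zip(closes, closes[1:])]


closes = [100.0, 101.5, 101.0, 101.0, 103.2]   # hypothetical closing prices
labels = make_direction_labels(closes)
print(labels)  # [1, 0, 0, 1]
```

With labels of this kind the network would end in a single sigmoid unit and be trained with a binary cross-entropy loss, rather than the mean squared error used for regression.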

The same process can also be used to train a Seq2Seq network without "teacher forcing". The file to download is called fra-eng.

Decoded sentence: Laissez tomber!

The expected output should be a sequence of the next three consecutive multiples of five, [, , ].

And I am not shuffling the data before each epoch because I would like the LSTM to find dependencies between the sequences.

Hi adhamj90, thank you for sharing your interesting use case! The different rows are used independently from each other during training.
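Because each row is treated as an independent training sample, sequences from several data sets can be built separately and then concatenated, so that no window mixes values from two sources. A minimal sketch with hypothetical helper names and toy values:

```python
def make_windows(values, n_steps):
    """Sliding windows over one data set: n_steps inputs, next value as target."""
    X, y = [], []
    for t in range(n_steps, len(values)):
        X.append(values[t - n_steps:t])
        y.append(values[t])
    return X, y


def windows_from_datasets(datasets, n_steps):
    """Window each data set on its own, then concatenate the samples."""
    X_all, y_all = [], []
    for values in datasets:
        X, y = make_windows(values, n_steps)
        X_all.extend(X)
        y_all.extend(y)
    return X_all, y_all


datasets = [[1, 2, 3, 4, 5], [10, 20, 30, 40]]   # two toy data sets
X, y = windows_from_datasets(datasets, n_steps=3)
print(X, y)
```

Any dependency the LSTM can learn lives inside a window, so the order of the rows (shuffled or not) does not give a standard, non-stateful LSTM extra context across samples.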
