Jobs databricks


To learn about configuration options for jobs and how to edit your existing jobs, see Configure settings for Azure Databricks jobs. To learn how to manage and monitor job runs, see View and manage job runs. To create your first workflow with an Azure Databricks job, see the quickstart. The Tasks tab appears with the create task dialog along with the Job details side panel containing job-level settings.


If you need to make changes to the notebook, clicking Run Now again after editing the notebook will automatically run the new version of the notebook.


When you run a Databricks job, the tasks configured as part of the job run on Databricks compute, either a cluster or a SQL warehouse depending on the task type. Selecting the compute type and configuration options is important when you operationalize a job. This article provides a guide to using Databricks compute resources to run your jobs. See Spark configuration to learn how to add Spark properties to a cluster configuration.
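As a rough illustration of what a job cluster definition with Spark properties can look like, the sketch below builds a `job_clusters` entry in the shape used by the Jobs API 2.1. The helper name `build_job_cluster`, the cluster key, the runtime version, the node type, and the Spark property are all placeholder assumptions, not values taken from this article.

```python
import json


def build_job_cluster(cluster_key, spark_version, node_type_id, num_workers, spark_conf=None):
    """Build one entry for the `job_clusters` list of a job definition.

    This is a hypothetical helper; it only assembles a dictionary and
    does not call any Databricks API.
    """
    return {
        "job_cluster_key": cluster_key,
        "new_cluster": {
            "spark_version": spark_version,
            "node_type_id": node_type_id,
            "num_workers": num_workers,
            # Spark properties are passed as string key/value pairs.
            "spark_conf": dict(spark_conf or {}),
        },
    }


# Placeholder values; pick a runtime and node type available in your workspace.
cluster = build_job_cluster(
    "etl_cluster",
    "13.3.x-scala2.12",
    "Standard_DS3_v2",
    2,
    {"spark.sql.shuffle.partitions": "64"},
)
print(json.dumps(cluster, indent=2))
```

Tasks in the same job can then refer to this cluster through its `job_cluster_key`, so several tasks share one configuration.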


Thousands of Databricks customers use Databricks Workflows every day to orchestrate business-critical workloads on the Databricks Lakehouse Platform. A great way to simplify those critical workloads is through modular orchestration. This is now possible through our new task type, Run Job, which allows Workflows users to call a previously defined job as a task. Modular orchestration allows a DAG to be split along organizational boundaries, so that different teams in an organization can work together on different parts of a workflow. Each team owns its child job, including testing and updates, which makes the parent workflows more reliable. Modular orchestration also offers reusability, because one child job can be called from several parent workflows.
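A parent workflow that calls existing jobs can be sketched as a plain job definition. The example below assumes the Jobs API 2.1 payload shape, in which a Run Job task is expressed with a `run_job_task` field holding the child `job_id`. The helper `run_job_task`, the job name, the task keys, and the job IDs 111 and 222 are hypothetical.

```python
import json


def run_job_task(task_key, job_id, depends_on=()):
    """Build a task that triggers a previously defined job (hypothetical helper)."""
    task = {
        "task_key": task_key,
        "run_job_task": {"job_id": job_id},
    }
    if depends_on:
        # Dependencies are expressed by upstream task key.
        task["depends_on"] = [{"task_key": key} for key in depends_on]
    return task


# Two child jobs owned by different teams, chained in one parent workflow.
parent_job = {
    "name": "parent_workflow",
    "tasks": [
        run_job_task("ingest", 111),
        run_job_task("transform", 222, depends_on=["ingest"]),
    ],
}
print(json.dumps(parent_job, indent=2))
```

Because each child job is referenced only by ID, the owning team can change its tasks without the parent definition needing an edit.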

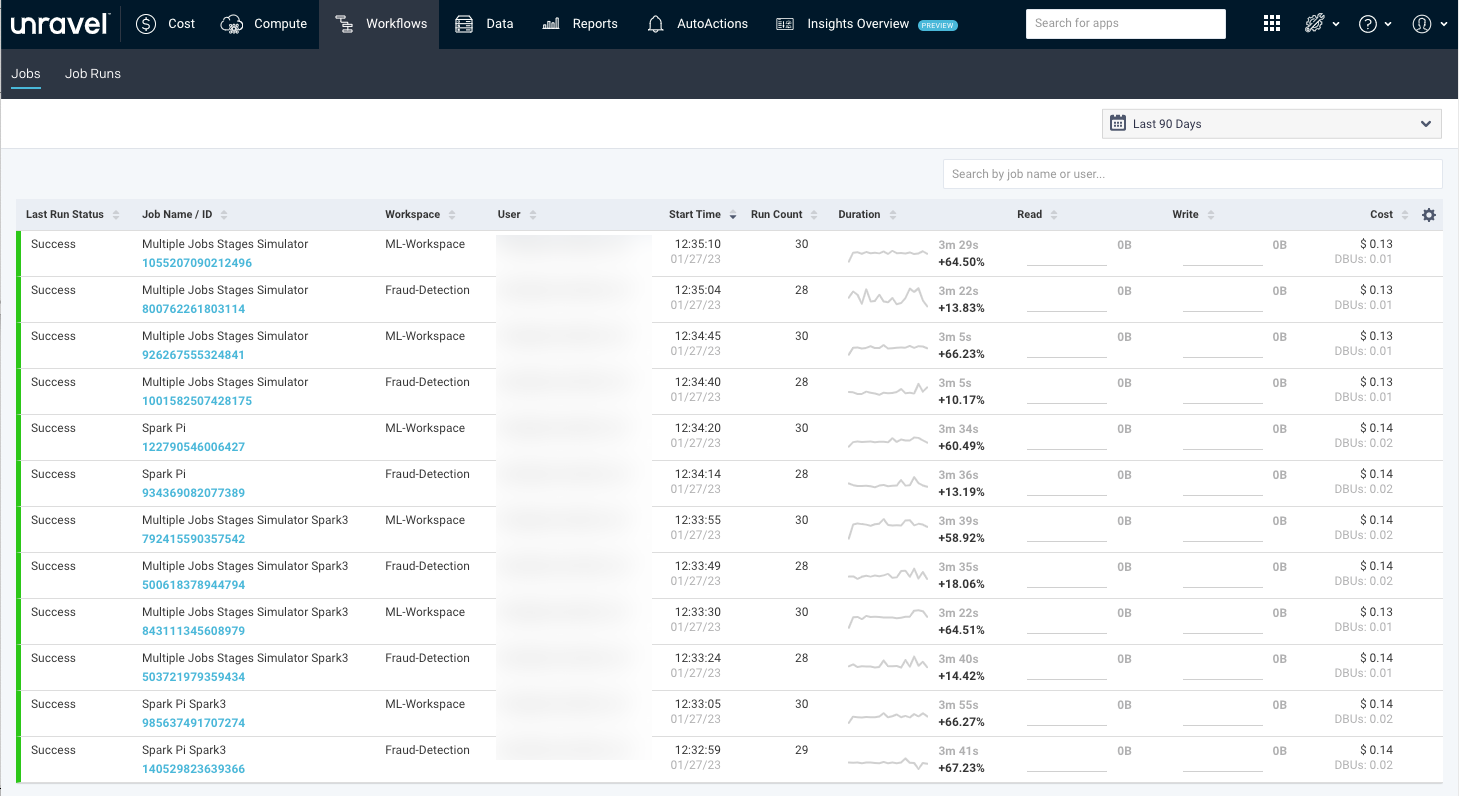


Select a job and click the Runs tab. To enable queueing, click the Queue toggle in the Job details side panel. See Add a job schedule.

Configure the cluster where the task runs. Spark-submit does not support cluster autoscaling. Spark Streaming jobs should never have maximum concurrent runs set to greater than 1. Because a streaming task runs continuously, it should always be the final task in a job.

Workspace: Use the file browser to find the notebook, click the notebook name, and click Confirm. Git provider: Click Edit or Add a git reference and enter the Git repository information. Dashboard: In the SQL dashboard drop-down menu, select a dashboard to be updated when the task runs.

Parameters set the value of the notebook widget specified by the key of the parameter. A warning is shown in the UI if you attempt to add a task parameter with the same key as a job parameter.

Note: If your job runs SQL queries using the SQL task, the identity used to run the queries is determined by the sharing settings of each query, even if the job runs as a service principal. For more information, see Roles for managing service principals and Jobs access control.
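The settings described above (queueing, maximum concurrent runs, notebook parameters, and the placement of a streaming task) can be sketched as a job definition plus a small check. The field names follow the Jobs API 2.1 payload shape as an assumption. The helpers `notebook_task` and `streaming_job_problems`, the notebook paths, and the parameter keys are hypothetical.

```python
def notebook_task(task_key, notebook_path, parameters, depends_on=()):
    """Build a notebook task; each parameter key sets the widget of the same name."""
    task = {
        "task_key": task_key,
        "notebook_task": {
            "notebook_path": notebook_path,
            "base_parameters": dict(parameters),
        },
    }
    if depends_on:
        task["depends_on"] = [{"task_key": key} for key in depends_on]
    return task


def streaming_job_problems(settings, streaming_task_key):
    """Return a list of violations of the two streaming rules described above."""
    problems = []
    if settings.get("max_concurrent_runs", 1) > 1:
        problems.append("max_concurrent_runs must be 1 for a streaming job")
    for task in settings["tasks"]:
        upstream = [dep["task_key"] for dep in task.get("depends_on", [])]
        # A streaming task never finishes, so nothing may depend on it.
        if streaming_task_key in upstream:
            problems.append(f"task '{task['task_key']}' runs after the streaming task")
    return problems


settings = {
    "name": "events_pipeline",
    "max_concurrent_runs": 1,
    "queue": {"enabled": True},
    "tasks": [
        notebook_task("prepare", "/Workspace/Shared/prepare", {"run_date": "2024-01-01"}),
        notebook_task("stream", "/Workspace/Shared/stream", {"source": "events"},
                      depends_on=["prepare"]),
    ],
}
problems = streaming_job_problems(settings, "stream")
print(problems)
```

Inside the notebook, a parameter such as `run_date` would typically be read with `dbutils.widgets.get("run_date")`, which is available only in a Databricks notebook and is therefore not called here.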


If you are using a Unity Catalog-enabled cluster, spark-submit is supported only if the cluster uses the assigned access mode.

Tip: You can perform a test run of a job with a notebook task by clicking Run Now. To run the job immediately, click Run Now.

Click Add under Dependent Libraries to add libraries required to run the task. Click and select Remove task.

JAR: Specify the Main class. Use the fully qualified name of the class containing the main method, for example, org.
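A JAR task with its dependent library might be expressed as follows. This is a minimal sketch assuming the Jobs API 2.1 payload shape (`spark_jar_task` with `main_class_name`, and a `libraries` list). The helper `jar_task`, the class name `com.example.jobs.Main`, the JAR path, and the parameters are hypothetical placeholders.

```python
import json


def jar_task(task_key, main_class_name, jar_path, parameters=()):
    """Build a JAR task definition (hypothetical helper, no API call is made)."""
    if "." not in main_class_name:
        # The main class should be fully qualified, i.e. include its package.
        raise ValueError("main_class_name must be a fully qualified class name")
    return {
        "task_key": task_key,
        "spark_jar_task": {
            "main_class_name": main_class_name,
            "parameters": list(parameters),
        },
        # The JAR containing the main class is attached as a dependent library.
        "libraries": [{"jar": jar_path}],
    }


task = jar_task(
    "nightly_batch",
    "com.example.jobs.Main",
    "dbfs:/FileStore/jars/example-jobs.jar",
    ["--date", "2024-01-01"],
)
print(json.dumps(task, indent=2))
```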
