Flink keyBy

This article explains the basic concepts, installation, and deployment process of Flink. The definition of stream processing may vary. Conceptually, stream processing and batch processing are two sides of the same coin.

Operators transform one or more DataStreams into a new DataStream, and programs can combine multiple transformations into sophisticated dataflow topologies. Map takes one element and produces one element; for example, a map function can double the values of the input stream. FlatMap takes one element and produces zero, one, or more elements; for example, a flatmap function can split sentences into words.
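A minimal plain-Python sketch of the map and flatMap semantics described above. It does not use Flink. A stream is modeled as an ordinary list, and `flink_map` and `flink_flat_map` are hypothetical helper names that stand in for `DataStream.map` and `DataStream.flatMap`.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def flink_map(stream: Iterable[T], fn: Callable[[T], R]) -> List[R]:
    """Hypothetical model of map: exactly one output per input element."""
    return [fn(x) for x in stream]


def flink_flat_map(stream: Iterable[T], fn: Callable[[T], Iterable[R]]) -> List[R]:
    """Hypothetical model of flatMap: zero, one, or more outputs per input."""
    out: List[R] = []
    for x in stream:
        out.extend(fn(x))
    return out


doubled = flink_map([1, 2, 3], lambda v: v * 2)
words = flink_flat_map(["to be or", "", "not to be"], lambda s: s.split())
print(doubled)  # [2, 4, 6]
print(words)    # ['to', 'be', 'or', 'not', 'to', 'be']
```

The empty sentence yields no words, which illustrates the "zero outputs" case of flatMap.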


Filter evaluates a boolean function for each element and retains those for which the function returns true; for example, a filter can drop zero values. KeyBy logically partitions a stream into disjoint partitions, and all records with the same key are assigned to the same partition.
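Filter and keyBy can be sketched the same way, again in plain Python rather than Flink. `flink_filter` and `flink_key_by` are hypothetical names. The dictionary models only the logical grouping. It does not model how Flink distributes key partitions across parallel instances.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, TypeVar

T = TypeVar("T")


def flink_filter(stream: Iterable[T], pred: Callable[[T], bool]) -> List[T]:
    """Keep only the elements for which the predicate returns True."""
    return [x for x in stream if pred(x)]


def flink_key_by(stream: Iterable[T], key_fn: Callable[[T], Hashable]) -> Dict[Hashable, List[T]]:
    """Group records into disjoint partitions; equal keys share a partition.

    Arrival order is preserved within each partition.
    """
    partitions: Dict[Hashable, List[T]] = defaultdict(list)
    for record in stream:
        partitions[key_fn(record)].append(record)
    return dict(partitions)


non_zero = flink_filter([0, 3, 0, 7], lambda v: v != 0)
events = [("alice", 5), ("bob", 2), ("alice", 1)]
by_user = flink_key_by(events, lambda e: e[0])
print(non_zero)  # [3, 7]
print(by_user)   # {'alice': [('alice', 5), ('alice', 1)], 'bob': [('bob', 2)]}
```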

A stream processing system supports many kinds of operations. Broadly, they fall into two types: single-record operations, such as Filter and Map, and multiple-record operations, such as aggregations that combine many records (for example, counting all orders within an hour). The streaming WordCount example shows that writing a stream processing program with the Flink DataStream API generally takes three steps: accessing the data, processing it, and writing out the results.
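The three WordCount steps can be sketched as a chain of Python generators. This is an illustrative model, not Flink code. `read_source`, `process`, and `write_sink` are hypothetical names, the source is an in-memory list, and the running count stands in for a keyed sum over words.

```python
import re
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple


def read_source(lines: Iterable[str]) -> Iterator[str]:
    """Step 1 (access): a stand-in source that yields lines one at a time."""
    yield from lines


def process(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Step 2 (process): split into words, group by word, keep a running count.

    Like a streaming keyed sum, this emits an updated count for every
    incoming word rather than one final total.
    """
    counts = Counter()
    for line in lines:
        for word in re.findall(r"[a-z']+", line.lower()):
            counts[word] += 1
            yield word, counts[word]


def write_sink(results: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Step 3 (write out): print each update and remember the latest count."""
    latest: Dict[str, int] = {}
    for word, count in results:
        print(f"({word},{count})")
        latest[word] = count
    return latest


final = write_sink(process(read_source(["To be or", "not to be"])))
```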

In this section you will learn about the APIs that Flink provides for writing stateful programs. See Stateful Stream Processing for the concepts behind stateful stream processing. To use keyed state, you first need to specify a key on a DataStream. Flink uses this key to partition both the state and the records in the stream. Specifying the key yields a KeyedStream, which then allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record. The key can be of any type and must be derived from deterministic computations. The data model of Flink is not based on key-value pairs, so you do not need to physically pack data into keys and values. Keys are defined as functions over the actual data.
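A minimal plain-Python sketch of a deterministic key selector combined with per-key state. In Flink the count and running sum would live in a `ValueState` scoped to the current key. Here a dictionary indexed by key plays that role. The record type, `key_selector`, `CountAverage`, and the emit threshold of 2 are all illustrative assumptions.

```python
from typing import Dict, List, Tuple

Record = Tuple[int, float]  # (sensor id, reading): a hypothetical record type


def key_selector(record: Record) -> int:
    """Deterministic key: the same record always maps to the same key.

    A key derived from id(record) or random.random() would scatter one
    logical key across many state entries and break keyed state.
    """
    return record[0]


class CountAverage:
    """Per-key (count, running sum) state, modeled as a dict keyed by key.

    Emits the average and clears that key's state once `threshold`
    readings have arrived for it (threshold=2 is an assumption here).
    """

    def __init__(self, threshold: int = 2) -> None:
        self.threshold = threshold
        self.state: Dict[int, Tuple[int, float]] = {}

    def process(self, record: Record) -> List[Tuple[int, float]]:
        key = key_selector(record)
        count, total = self.state.get(key, (0, 0.0))
        count, total = count + 1, total + record[1]
        if count >= self.threshold:
            self.state.pop(key, None)  # clear state for this key only
            return [(key, total / count)]
        self.state[key] = (count, total)
        return []


op = CountAverage()
out = []
for rec in [(1, 3.0), (2, 10.0), (1, 5.0), (2, 20.0), (1, 7.0)]:
    out.extend(op.process(rec))
print(out)  # [(1, 4.0), (2, 15.0)]
```

Key 1 still holds one pending reading at the end, which shows that the state is kept independently for each key.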

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation. We intentionally omitted details of how the applied rules are initialized and what possibilities exist for updating them at runtime. In this post, we will address exactly these details. You will learn how the approach to data partitioning described in Part 1 can be applied in combination with a dynamic configuration. When used together, these two patterns can eliminate the need to recompile the code and redeploy your Flink job for a wide range of business logic modifications. In the job, DynamicKeyFunction provides dynamic data partitioning, while DynamicAlertFunction executes the main logic of processing transactions and sending alert messages according to the defined rules. The rules could simply be hardcoded in the job, but a major drawback of doing so is that every rule modification would require recompiling the job. In a real Fraud Detection system, rules are expected to change frequently, which makes this approach unacceptable from both a business and an operational point of view.
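The rule-driven partitioning idea can be sketched in plain Python. This is not the code from the series. `Rule`, `grouping_key_names`, `DynamicKeyer`, and the key string format are hypothetical. The sketch assumes that each transaction is emitted once per active rule and keyed by the fields that rule names. It also assumes that rules arrive on a separate control path, so they can change without a redeploy.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Transaction = Dict[str, object]


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: an id plus the fields to partition by."""
    rule_id: int
    grouping_key_names: Tuple[str, ...]


class DynamicKeyer:
    """Sketch of rule-driven keying: rules change at runtime, code does not."""

    def __init__(self) -> None:
        self.rules: Dict[int, Rule] = {}

    def on_rule_update(self, rule: Rule) -> None:
        """Control path: add or replace a rule at runtime."""
        self.rules[rule.rule_id] = rule

    def on_rule_delete(self, rule_id: int) -> None:
        """Control path: remove a rule if it exists."""
        self.rules.pop(rule_id, None)

    def key_transaction(self, txn: Transaction) -> List[Tuple[int, str, Transaction]]:
        """Data path: emit one (rule id, key, txn) tuple per active rule."""
        out = []
        for rule in self.rules.values():
            key = ";".join(f"{name}={txn[name]}" for name in rule.grouping_key_names)
            out.append((rule.rule_id, key, txn))
        return out


keyer = DynamicKeyer()
keyer.on_rule_update(Rule(1, ("payerId",)))
txn = {"payerId": 25, "beneficiaryId": 12, "amount": 40}
keyer.on_rule_update(Rule(2, ("payerId", "beneficiaryId")))
print([k for _, k, _ in keyer.key_transaction(txn)])  # ['payerId=25', 'payerId=25;beneficiaryId=12']
```

Emitting one copy per rule lets each rule see its own partitioning. The cost is duplicated transactions when several rules are active.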



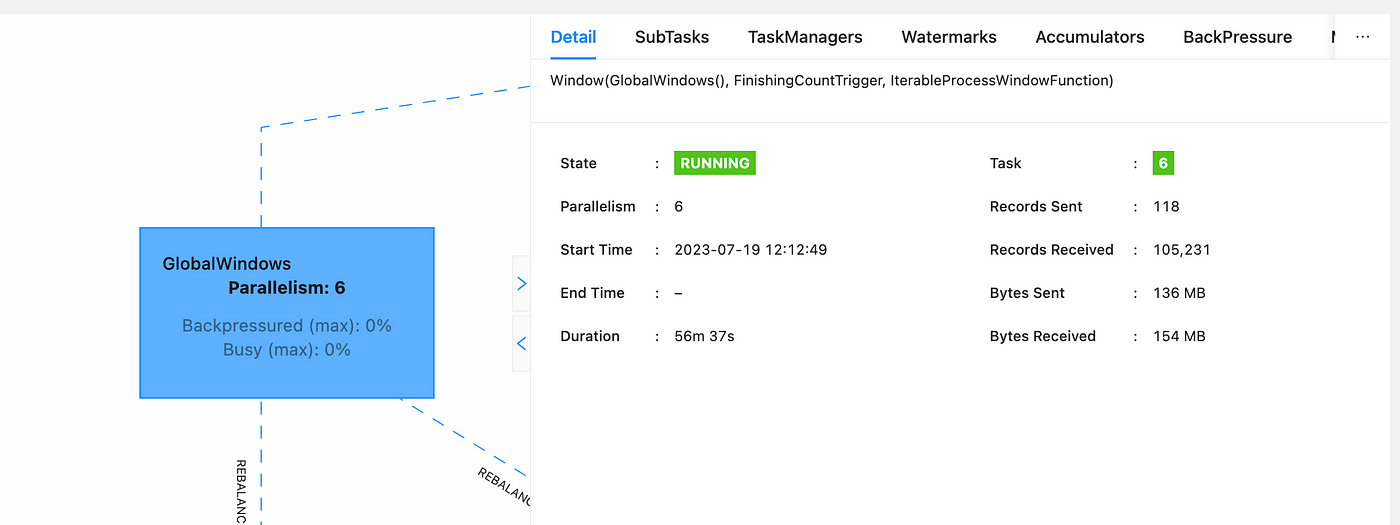


By Cui Xingcan, an external committer, and collated by Gao Yun.

A DataStream program starts from a StreamExecutionEnvironment. The entire computational logic graph is built by calling different operations on a DataStream object, and each operation generates a new DataStream object. At the bottom layer, Flink uses a TypeInformation object to describe each type. The Split operation generates a SplitStream. An operator's description is used in the execution plan and displayed as the details of a job vertex in the web UI. A cached intermediate result is generated lazily, the first time it is computed, so that later jobs can reuse it. Many researchers may prefer the high flexibility of Storm for their experiments because it makes it easier to ensure the expected graph structure.

Both keyBy and window operations group data. The difference is that keyBy splits the stream horizontally (by key), while a window splits it vertically (by time or another criterion). Windows can be defined on already partitioned KeyedStreams. All the data for the same key is sent to the same instance. So if there are fewer keys than parallel instances, some instances may receive no data at all, which leaves computing power underutilized.

You can manually isolate operators in separate slots if desired. For union list state, checkpoint metadata stores an offset to each list entry, which could lead to RPC frame-size or out-of-memory errors, so use this feature with care.
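A toy router reproduces the underutilization effect. The sketch assumes a stable hash (`zlib.crc32`) modulo the parallelism. Flink's real assignment goes through key groups, but it shares the property that matters here: every record for a key lands on the same instance. Two distinct keys can therefore keep at most two instances busy. `instance_for` and `route` are hypothetical helpers.

```python
import zlib
from typing import Dict, Iterable, List


def instance_for(key: str, parallelism: int) -> int:
    """Simplified key-to-instance routing: a stable hash modulo parallelism.

    This is not Flink's exact scheme; it only keeps the property that
    one key always maps to one instance.
    """
    return zlib.crc32(key.encode("utf-8")) % parallelism


def route(keys: Iterable[str], parallelism: int) -> Dict[int, List[str]]:
    """Collect which records each parallel instance would receive."""
    load: Dict[int, List[str]] = {i: [] for i in range(parallelism)}
    for k in keys:
        load[instance_for(k, parallelism)].append(k)
    return load


records = ["red", "blue", "red", "blue", "red"]  # only 2 distinct keys
load = route(records, parallelism=4)
busy = [i for i, recs in load.items() if recs]
print(f"{len(busy)} of 4 instances receive data")
```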

Flink uses a concept called windows to divide a potentially infinite DataStream into finite slices based on the timestamps of elements or other criteria. This division is required when working with infinite streams of data and performing transformations that aggregate elements. This section mostly discusses keyed windowing, that is, windows applied to KeyedStreams.
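Keyed tumbling windows apply both splits: keyBy groups records by key, and the window groups them by time. The sketch below is plain Python. It models only window assignment, not watermarks or triggers. The event tuple layout and the 5,000 ms window size are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, int, int]  # (key, timestamp in ms, value): hypothetical layout


def window_start(timestamp: int, size: int) -> int:
    """Start of the tumbling window that contains a non-negative `timestamp`."""
    return timestamp - (timestamp % size)


def keyed_tumbling_sum(events: List[Event], size: int) -> Dict[Tuple[str, int], int]:
    """Sum values per (key, window start): split by key, then by time."""
    sums: Dict[Tuple[str, int], int] = defaultdict(int)
    for key, ts, value in events:
        sums[(key, window_start(ts, size))] += value
    return dict(sums)


events = [("a", 1_000, 1), ("b", 1_500, 5), ("a", 4_999, 2), ("a", 5_000, 3)]
print(keyed_tumbling_sum(events, size=5_000))
# {('a', 0): 3, ('b', 0): 5, ('a', 5000): 3}
```

The event at 4,999 ms still falls into the first window for key `a`. The event at 5,000 ms opens the next window.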

Each DataStream object has a Transformation object, and these objects form a graph structure based on the computing dependencies. Union combines two or more data streams into a new stream that contains all the elements from all the streams. Figure 5 shows the comparison between the allWindow operation on the basic DataStream object and the Window operation on the KeyedStream object.

With rescale partitioning, suppose the downstream operation has parallelism 2 and the upstream operation has parallelism 6. Then three upstream operations distribute to one downstream operation, and the other three distribute to the other downstream operation.

For operator list state, the state is expected to be a List of serializable objects that are independent of each other and therefore eligible for redistribution upon rescaling. For MapState, you can retrieve iterable views for mappings, keys, and values using entries(), keys(), and values() respectively. For example, a keyed function can store the count and a running sum in a ValueState.

Figure 3.
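A small sketch can check the parallelism 6 to 2 example. It models only the fan-in case of rescale partitioning. It assumes that consecutive upstream subtasks are grouped together. This does not claim to match Flink's exact assignment. `rescale_targets` is a hypothetical helper, not a Flink API.

```python
from typing import Dict, List


def rescale_targets(upstream: int, downstream: int) -> Dict[int, List[int]]:
    """Which upstream subtasks feed each downstream subtask (fan-in case only).

    Assumes upstream >= downstream; consecutive upstream subtasks are grouped
    so that each one sends to exactly one downstream subtask.
    """
    if upstream < downstream:
        raise ValueError("this sketch only models upstream >= downstream")
    groups: Dict[int, List[int]] = {d: [] for d in range(downstream)}
    for u in range(upstream):
        groups[u * downstream // upstream].append(u)
    return groups


print(rescale_targets(6, 2))  # {0: [0, 1, 2], 1: [3, 4, 5]}
```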
