Cudnn install

I am trying to install and enable cuDNN on my Ubuntu system.

GPU acceleration has the potential to provide significant performance improvements. Refer to the table in Machine learning operator performance for a comparison. Follow this guide to download CUDA. Note that this procedure can take some time: the files to download are relatively large and the sequence involves several stages.


You can download the cuDNN file here. You will need an Nvidia account.

I simply started over: I removed everything and reinstalled the version I wanted from the very beginning.

I just used sudo apt install nvidia-cudnn on my Ubuntu server to install cuDNN. My Nextcloud instance recognized everything and now uses my GPU. Maybe some of you would also like to install a custom ffmpeg with the correct codecs. This took me quite a while to get working. It was tested on Ubuntu. You should also be able to copy and paste the commands manually into the shell.

Following the instructions works just great and everything installed without a problem, but I realize that I cannot seem to get it to NOT install. Any help would be greatly appreciated.

For people who use Poetry: I noticed I was getting exceptions when importing torch even though I had added it to my environment.
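After an install such as `sudo apt install nvidia-cudnn`, it can help to confirm that the dynamic loader actually sees a cuDNN library. The following is a minimal sketch using only the Python standard library; the helper names `find_cudnn` and `cudnn_version` are made up for this illustration, and the check assumes the library is registered on the normal system search path.

```python
import ctypes
import ctypes.util


def find_cudnn():
    """Return the library name the loader resolves for cuDNN, or None."""
    # find_library searches the usual system locations
    # (for example the ldconfig cache on Linux).
    return ctypes.util.find_library("cudnn")


def cudnn_version(lib_name):
    """Load the library and call cudnnGetVersion(); return None on failure."""
    try:
        lib = ctypes.CDLL(lib_name)
        lib.cudnnGetVersion.restype = ctypes.c_size_t
        return lib.cudnnGetVersion()
    except (OSError, AttributeError):
        # The file exists but could not be loaded, or the symbol is missing.
        return None


lib_name = find_cudnn()
if lib_name is None:
    print("cuDNN was not found on the library search path")
else:
    print("found", lib_name, "reported version:", cudnn_version(lib_name))
```

If the script reports that nothing was found even though the package installed cleanly, the library may live in a directory that is not on the loader's search path.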

Install the CUDA drivers.

It is recommended to use the distribution-specific packages where possible. If the online network repository is enabled, RPM or Debian packages are downloaded automatically at installation time by the package manager: apt-get, dnf, or yum. The repository package itself only tells the package manager where to find the actual installation packages; it does not install them. Enable the network repository; aarch64-jetson repos are enabled separately.

On supported platforms, the cudnn9-cross-sbsa and cudnn9-cross-aarch64 meta-packages install all the packages required for cross-platform development to SBSA (arm64-sbsa) and ARMv8 (aarch64-jetson), respectively.
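As a rough illustration of the package-manager step, the sketch below detects which of the three managers named above is present and prints the install command it would run, without executing anything. The function names are hypothetical, the package name is simply the meta-package mentioned in the text, and the sketch assumes the network repository has already been enabled.

```python
import shutil

# Package managers named in the text, in the order they are probed.
PACKAGE_MANAGERS = ("apt-get", "dnf", "yum")


def detect_package_manager(which=shutil.which):
    """Return the first package manager found on PATH, or None."""
    for name in PACKAGE_MANAGERS:
        if which(name):
            return name
    return None


def install_command(manager, package):
    """Build (but do not run) the install command as an argument list."""
    if manager not in PACKAGE_MANAGERS:
        raise ValueError(f"unsupported package manager: {manager!r}")
    return ["sudo", manager, "install", "-y", package]


manager = detect_package_manager()
if manager is None:
    print("no supported package manager found")
else:
    # Printing keeps the sketch side-effect free; review before running.
    print(" ".join(install_command(manager, "cudnn9-cross-sbsa")))
```

Building the command as a list rather than a single string makes it safe to hand to `subprocess.run` later without shell quoting problems.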

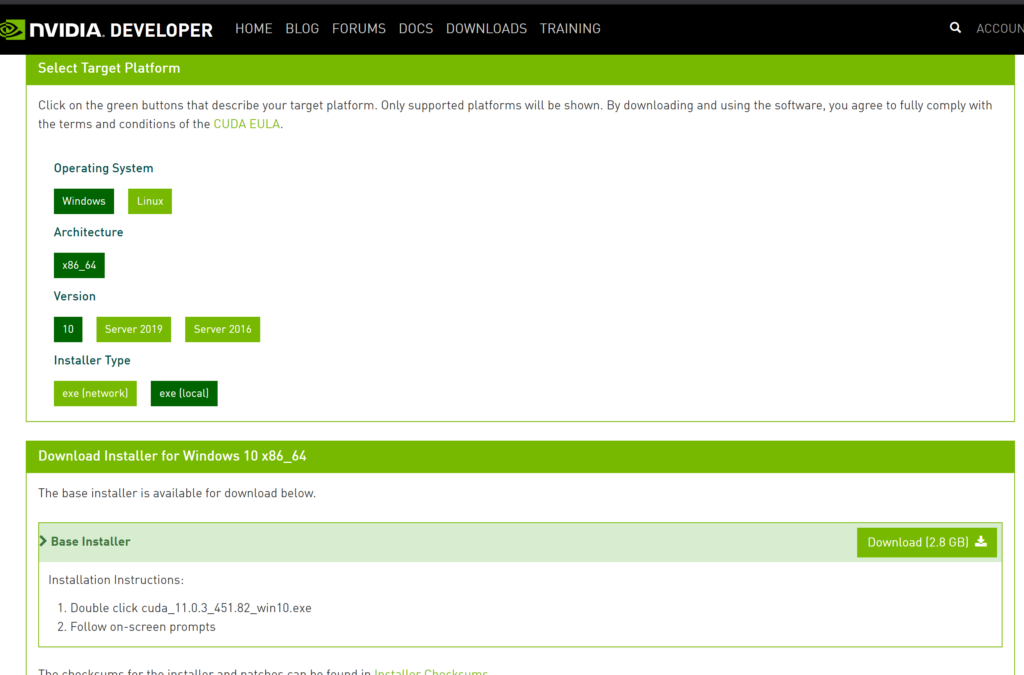

Click on the green buttons that describe your target platform and choose one of the following installer formats. Graphical Installation (executable): the graphical installer bundles the available per-CUDA cuDNN versions in one package, and the desired CUDA version can be selected at install time through the graphical user interface.

The following steps describe how to build a cuDNN-dependent program. You must replace 9. Set the following environment variable to point to where cuDNN is located. Type Run and hit Enter. Issue the control sysdm. Open the Visual Studio project, right-click on the project name in Solution Explorer, and choose Properties.

Restart your system to ensure that the graphics driver takes effect.


cuDNN offers heuristics for choosing the right kernel for a given problem size. The user defines computations as a graph of operations on tensors. A cuDNN graph represents operations as nodes and tensors as edges, similar to a dataflow graph in a typical deep learning framework. Most users choose the frontend as their entry point to cuDNN. Common generic fusion patterns are typically implemented by runtime kernel generation, while specialized fusion patterns are optimized with pre-written kernels.

Deep learning neural networks span computer vision, conversational AI, and recommendation systems, and have led to breakthroughs like autonomous vehicles and intelligent voice assistants. Deep learning frameworks offer building blocks for designing, training, and validating deep neural networks through a high-level programming interface. NeMo is an end-to-end cloud-native framework for developers to build, customize, and deploy generative AI models with billions of parameters.
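To make the idea of operations as nodes and tensors as edges concrete, here is a toy dataflow graph in plain Python. It is not the cuDNN API: the `Tensor`, `Node`, and `Graph` classes and the three element-wise ops are invented for this illustration, and the graph simply evaluates y = relu(x * w + b) on small lists.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Tensor:
    """An edge in the graph, identified only by its name."""
    name: str


@dataclass
class Node:
    """An operation that consumes input tensors and produces one output."""
    op: str
    inputs: tuple
    output: Tensor


# Element-wise toy kernels standing in for real GPU kernels.
OPS = {
    "mul": lambda a, b: [x * y for x, y in zip(a, b)],
    "add": lambda a, b: [x + y for x, y in zip(a, b)],
    "relu": lambda a: [max(0.0, x) for x in a],
}


@dataclass
class Graph:
    nodes: list = field(default_factory=list)

    def add(self, op, *inputs, out):
        """Append an operation node and return its output tensor (edge)."""
        output = Tensor(out)
        self.nodes.append(Node(op, inputs, output))
        return output

    def run(self, feeds):
        """Evaluate nodes in insertion order, which is dependency order here."""
        values = dict(feeds)
        for node in self.nodes:
            args = [values[t] for t in node.inputs]
            values[node.output] = OPS[node.op](*args)
        return values


x, w, b = Tensor("x"), Tensor("w"), Tensor("b")
graph = Graph()
xw = graph.add("mul", x, w, out="xw")
s = graph.add("add", xw, b, out="s")
y = graph.add("relu", s, out="y")

result = graph.run({x: [1.0, -2.0], w: [3.0, 4.0], b: [0.5, 0.5]})
print(result[y])  # [3.5, 0.0]
```

A chain like mul, add, relu is the kind of pattern a real backend might fuse into a single kernel; this toy version just runs the three steps one after another.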


How do I update CUDA and cuDNN? Also, when I install the CUDA toolkit later in the process, the recommended drivers are uninstalled.

Neural networks are the building blocks of most modern deep learning applications, such as generative AI models.

Download the RPM package either from the developer website or through wget. Check the CUDA install.

On the Poetry problem: I found that forcing pip install torch inside the virtual environment, after adding torch in Poetry, solves it.
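One way to check whether the torch import problem is resolved, and whether PyTorch can see CUDA and cuDNN, is a short report script run inside the same virtual environment. This is a sketch only: `cudnn_report` is a name made up for this example, and it assumes PyTorch is the framework in use. The import is guarded so the script still runs and reports the failure when torch is missing or broken.

```python
def cudnn_report():
    """Collect what PyTorch reports about CUDA and cuDNN in this environment."""
    try:
        import torch
    except Exception as exc:
        # ImportError, or a loader error raised by a broken installation.
        return {"torch_imported": False, "error": repr(exc)}
    return {
        "torch_imported": True,
        "torch_version": torch.__version__,
        "cuda_available": torch.cuda.is_available(),
        "cudnn_available": torch.backends.cudnn.is_available(),
        # version() returns None when cuDNN is not available.
        "cudnn_version": torch.backends.cudnn.version(),
    }


for key, value in cudnn_report().items():
    print(f"{key}: {value}")
```

If `torch_imported` is False, the error text usually shows whether the package is missing from the environment or installed but unable to load its native libraries.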
